Quest 3 developers can now integrate three major new features to improve both virtual and mixed reality.

Inside-Out Body Tracking (IOBT) and Generative Legs were announced alongside Quest 3 as coming in December. Occlusion for mixed reality via the Depth API was previously an experimental feature, meaning developers could test it but couldn't ship it in Quest Store or App Lab builds.

All three features are now available as part of the v60 SDK for Unity and native code. Meanwhile, the v60 Unreal Engine integration includes Depth API, but not IOBT or Generative Legs.

Inside-Out Upper Body Tracking

Inside-Out Body Tracking (IOBT) uses Quest 3's side cameras, which face downward, to track your wrists, elbows, shoulders, and torso using advanced computer vision algorithms.

IOBT avoids the problems of arms estimated with inverse kinematics (IK), which are often incorrect and feel uncomfortable because the system is just making guesses from the location of your head and hands. When developers integrate IOBT, you'll see your arms and torso in their actual positions - not an estimate.

This upper body tracking also lets developers anchor thumbstick locomotion to your body direction, not just your head or hands, and you can perform new actions like leaning over a ledge and have this show realistically on your avatar.
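To illustrate what body-anchored locomotion means in practice, here is a minimal sketch in Python rather than engine code. It assumes the app can read a torso yaw angle from the tracked body pose; the function name `body_relative_move` and the axis convention (yaw 0 faces +Z, positive yaw turns toward +X) are assumptions for illustration, not part of Meta's SDK.

```python
import math

def body_relative_move(stick_x, stick_y, torso_yaw_rad):
    """Rotate thumbstick input by the torso yaw.

    stick_x is strafe right, stick_y is forward, both in [-1, 1].
    Returns a (world_x, world_z) direction on the ground plane.
    Assumed convention: yaw 0 faces +Z, positive yaw turns toward +X.
    """
    sin_yaw = math.sin(torso_yaw_rad)
    cos_yaw = math.cos(torso_yaw_rad)
    world_x = stick_x * cos_yaw + stick_y * sin_yaw
    world_z = -stick_x * sin_yaw + stick_y * cos_yaw
    return world_x, world_z
```

With head-anchored locomotion the same rotation would use the headset's yaw, so glancing sideways while walking would change your direction. Using the torso yaw keeps you moving where your body is facing.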

AI Generative Legs

IOBT only works for your upper body. For your lower body, Meta has launched Generative Legs.

Generative Legs uses a "cutting edge" AI model to estimate your leg positions - a technology the company has been researching for years.

On Quest 3, Generative Legs delivers a more realistic estimate than previous headsets thanks to using the upper-body tracking as input, but it also works on Quest Pro and Quest 2 using only head and hands.

The Generative Legs system is just an estimator though, not tracking, so while it can detect jumping and crouching, it won't pick up many actual movements like raising your knees.

Together, inside-out upper body tracking and Generative Legs enable plausible full bodies in VR, called Full Body Synthesis, with no external hardware.

Meta previously announced that Full Body Synthesis will come to Supernatural, Swordsman VR, and Drunken Bar Fight.

Depth API For Occlusion In Mixed Reality

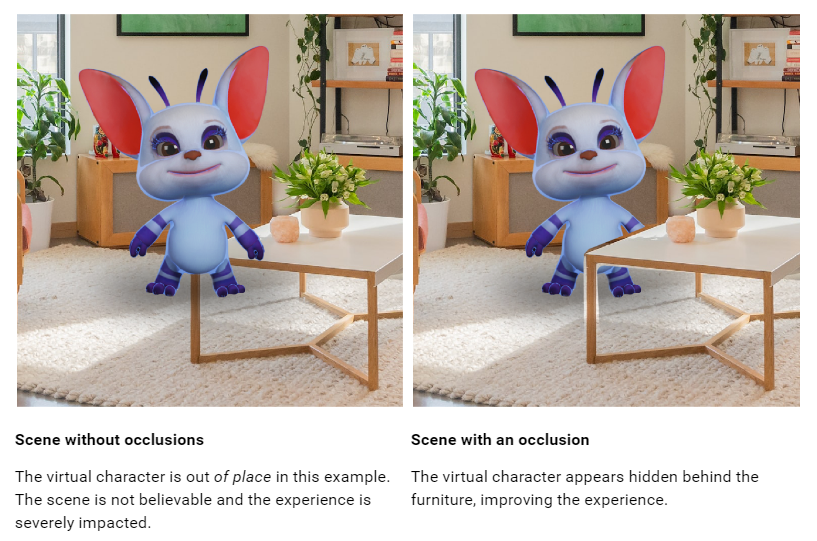

In our Quest 3 review we harshly criticized the lack of mixed reality dynamic occlusion. While virtual objects can appear behind the crude Scene Mesh generated by the room setup scan, they always display in front of moving objects such as your arms and other people, even when the virtual object is further away, which looks jarring and unnatural.

Developers could already implement dynamic occlusion for your hands by using the Hand Tracking mesh, but few did because this cuts off at your wrist so the rest of your arm isn't included.

The new Depth API gives developers a per-frame coarse depth map generated by the headset from its point of view. This can be used to implement occlusion, both for moving objects and for finer details of static objects since those may not have been captured in the Scene Mesh.

Dynamic occlusion should make mixed reality on Quest 3 look more natural. However, the headset's depth sensing resolution is very low, so it won't pick up details like the spaces between your fingers and you'll see an empty gap around the edges of objects.

Meta also only recommends using the depth map out to 4 meters, after which "accuracy drops significantly," so developers may want to also use the Scene Mesh for static occlusion.
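One way to combine the two sources is to trust the depth map inside its recommended range and fall back to the Scene Mesh beyond it. The sketch below shows that per-pixel decision in Python. The function and parameter names are hypothetical, and it assumes the app can already get both distances for the same pixel.

```python
MAX_RELIABLE_DEPTH_M = 4.0  # range quoted by Meta for the depth map

def occluder_depth(sensor_depth_m, mesh_depth_m):
    """Pick the real-world depth to occlude against for one pixel.

    sensor_depth_m: value from the per-frame depth map, or None if missing.
    mesh_depth_m: distance to the Scene Mesh along the same ray, or None.
    """
    # Prefer the live depth map while it is within its recommended range.
    if sensor_depth_m is not None and sensor_depth_m <= MAX_RELIABLE_DEPTH_M:
        return sensor_depth_m
    # Otherwise use the static Scene Mesh if it covers this pixel.
    if mesh_depth_m is not None:
        return mesh_depth_m
    # Nothing better available: return the sensor value as-is (may be None).
    return sensor_depth_m
```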

There are two ways developers can implement occlusion: Hard and Soft. Hard is essentially free but has jagged edges, while Soft has a GPU cost but looks better. Looking at Meta's example clip, it's hard to imagine any developer will choose Hard.
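The difference between the two modes comes down to how each pixel's visibility is decided. The following Python sketch is not Meta's shader code, just an illustration of the idea under simple assumptions: hard occlusion is a yes-or-no depth comparison, while soft occlusion fades visibility across a small depth band. The `fade_m` parameter and both function names are made up for this example.

```python
def hard_occlusion(virtual_depth_m, real_depth_m):
    """Return 1.0 if the virtual pixel is visible, 0.0 if it is hidden."""
    return 1.0 if virtual_depth_m <= real_depth_m else 0.0

def soft_occlusion(virtual_depth_m, real_depth_m, fade_m=0.05):
    """Return visibility in [0, 1], fading over a band of +/- fade_m meters."""
    # Positive margin means the virtual pixel is in front of the real surface.
    margin = real_depth_m - virtual_depth_m
    # Map the band [-fade_m, +fade_m] to [0, 1] and clamp outside it.
    t = (margin + fade_m) / (2.0 * fade_m)
    t = max(0.0, min(1.0, t))
    # Smoothstep gives a gentler transition than a linear ramp.
    return t * t * (3.0 - 2.0 * t)
```

The hard version flips between fully visible and fully hidden from one depth pixel to the next, which is where the jagged edges come from on a coarse depth map. The soft version costs extra work per pixel in exchange for a gradual edge.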

In both cases though, occlusion requires using Meta's special occlusion shaders or specifically implementing it in your custom shaders. It's far from a one-click solution and will likely be a significant effort for developers to support.

As well as for occlusion, developers could also use the Depth API to implement depth-based visual effects in mixed reality, such as fog.
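As a rough idea of how a depth-based fog effect could work, the sketch below turns a real-world depth value into the opacity of a fog-colored overlay drawn on top of passthrough. It uses a standard exponential fog falloff. The `density` value and function names are illustrative assumptions, and a real implementation would run in a shader rather than in Python.

```python
import math

def fog_factor(depth_m, density=0.35):
    """Exponential fog: 0.0 means no fog, values near 1.0 mean fully fogged."""
    return 1.0 - math.exp(-density * depth_m)

def fog_overlay_rgba(fog_rgb, depth_m, density=0.35):
    """Return an (r, g, b, a) overlay pixel whose alpha grows with depth."""
    r, g, b = fog_rgb
    return (r, g, b, fog_factor(depth_m, density))
```

Nearby real-world surfaces get an almost transparent overlay, while anything further away fades into the fog color.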

UploadVR testing Depth API occlusion.

You can find the documentation for Unity here and for Unreal here. Note that the documentation hasn't yet been updated to reflect the public release.