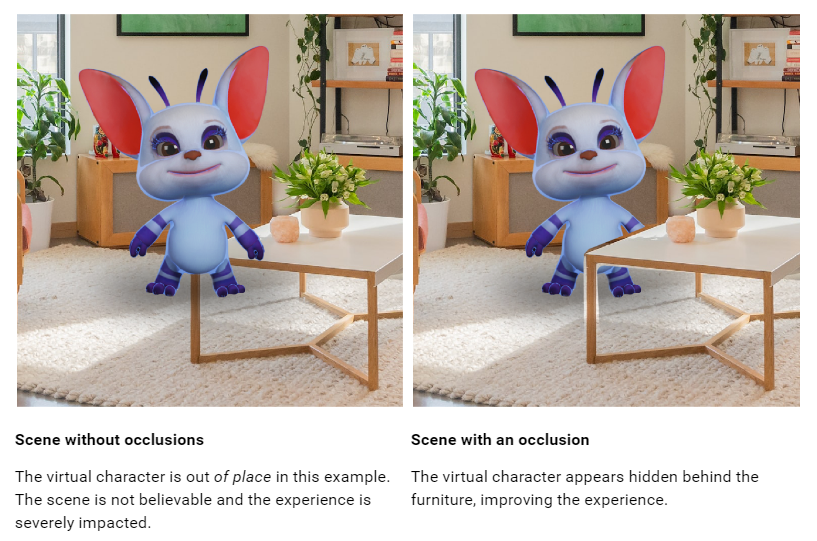

Meta released a Depth API for Quest 3 as an experimental feature for developers to test mixed reality dynamic occlusion.

In our Quest 3 review we harshly criticized the lack of mixed reality dynamic occlusion. While virtual objects can appear behind the crude Scene Mesh generated by the room setup scan, they always display in front of moving objects such as your arms and other people, even when the virtual object is farther away, which looks jarring and unnatural.

Developers can already implement dynamic occlusion for your hands by using the Hand Tracking mesh, but few do because the mesh cuts off at your wrist, so the rest of your arm isn't included.

The new Depth API gives developers a per-frame coarse depth map generated by the headset from its point of view. This can be used to implement occlusion, both for moving objects and for finer details of static objects since those may not have been captured in the Scene Mesh.
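To make the idea concrete, here is a minimal CPU-side sketch of a depth-based occlusion test. It is not Meta's API: the `DepthMap` type, the `sample_depth` and `is_occluded` helpers, and the 2x2 example map are all hypothetical, and a real implementation would run in a shader on the GPU against the depth texture the headset supplies.

```python
from typing import List

# Hypothetical stand-in for the headset's coarse depth map:
# distances in meters, stored row by row at low resolution.
DepthMap = List[List[float]]


def sample_depth(depth_map: DepthMap, u: float, v: float) -> float:
    """Nearest-neighbour lookup at normalized screen coords (u, v) in [0, 1]."""
    rows, cols = len(depth_map), len(depth_map[0])
    x = max(0, min(int(u * cols), cols - 1))
    y = max(0, min(int(v * rows), rows - 1))
    return depth_map[y][x]


def is_occluded(depth_map: DepthMap, u: float, v: float,
                virtual_depth_m: float) -> bool:
    """True when the real world is closer to the viewer than the virtual pixel."""
    return sample_depth(depth_map, u, v) < virtual_depth_m


# Example map: an arm 0.5 m away on the left, a wall 3 m away on the right.
depth_map = [[0.5, 3.0],
             [0.5, 3.0]]

# A virtual object 1.5 m away is hidden behind the arm but drawn in front of the wall.
behind_arm = is_occluded(depth_map, 0.1, 0.5, 1.5)
behind_wall = is_occluded(depth_map, 0.9, 0.5, 1.5)
```

Because the map is much coarser than the display, many screen pixels share one depth sample, which is one way to picture why fine details such as the gaps between fingers get lost.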

Dynamic occlusion should make mixed reality on Quest 3 look more natural. However, the headset's depth sensing resolution is very low, so it won't pick up details like the spaces between your fingers and you'll see an empty gap around the edges of objects.

The depth map is also only recommended for use out to 4 meters, after which "accuracy drops significantly," so developers may also want to use the Scene Mesh for static occlusion.
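One way a developer might combine the two sources is to trust the depth map up close and fall back to the Scene Mesh beyond the 4 meter mark. The sketch below is only an illustration of that policy: `occluder_depth`, its parameters, and the idea of receiving a per-pixel Scene Mesh distance are assumptions, not part of Meta's SDK.

```python
from typing import Optional

# The range quoted above, past which depth map accuracy drops significantly.
MAX_RELIABLE_DEPTH_M = 4.0


def occluder_depth(depth_api_m: float, scene_mesh_m: Optional[float]) -> float:
    """Pick which real-world distance to occlude against for one pixel.

    depth_api_m:  distance reported by the per-frame depth map (hypothetical input).
    scene_mesh_m: distance to the static Scene Mesh along the same ray,
                  or None if the mesh has no surface there (hypothetical input).
    """
    if depth_api_m <= MAX_RELIABLE_DEPTH_M:
        # Close range: the live depth map also captures moving objects.
        return depth_api_m
    if scene_mesh_m is not None:
        # Far range: prefer the static mesh captured during room setup.
        return scene_mesh_m
    # No mesh available: use the depth map reading anyway.
    return depth_api_m
```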

There are two ways developers can implement occlusion: Hard and Soft. Hard is essentially free but has jagged edges, while Soft has a GPU cost but looks better. Looking at Meta's example clip, it's hard to imagine any developer will choose Hard.
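The difference between the two modes can be pictured as a hard cutoff versus a gradual fade in the virtual pixel's opacity. The sketch below is a simplified illustration, not Meta's shader code: `hard_alpha`, `soft_alpha`, and the 10 cm blend band are assumptions, and the real Soft mode may smooth edges differently, for example by filtering several depth samples.

```python
def hard_alpha(real_depth_m: float, virtual_depth_m: float) -> float:
    """Binary test: the virtual pixel is either fully hidden or fully visible."""
    return 0.0 if real_depth_m < virtual_depth_m else 1.0


def soft_alpha(real_depth_m: float, virtual_depth_m: float,
               band_m: float = 0.1) -> float:
    """Fade the virtual pixel in over a depth band centered on the real surface.

    band_m is a hypothetical tuning value: the depth range, in meters,
    over which opacity ramps from 0 to 1.
    """
    t = (real_depth_m - virtual_depth_m) / band_m + 0.5
    t = max(0.0, min(1.0, t))
    # Smoothstep easing so the transition has no visible kinks at either end.
    return t * t * (3.0 - 2.0 * t)
```

With these definitions, a virtual pixel at exactly the same depth as the real surface gets an opacity of 0.5 from `soft_alpha`, while `hard_alpha` snaps it to fully visible, which is where the jagged edges come from.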

In both cases though, occlusion requires using Meta's special occlusion shaders or specifically implementing it in your custom shaders. It's far from a one-click solution and will likely be a significant effort for developers to support.

Beyond occlusion, developers could also use the Depth API to implement depth-based visual effects in mixed reality, such as fog.
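As a rough illustration of a depth-based effect, the sketch below applies standard exponential fog to a passthrough pixel using its distance from the depth map. The function names, the `density` default, and the RGB tuple representation are assumptions for this example; in practice this would be done per pixel in a shader.

```python
import math
from typing import Tuple

Color = Tuple[float, float, float]  # RGB, each channel in [0, 1]


def fog_factor(depth_m: float, density: float = 0.35) -> float:
    """Exponential fog: 0 at the viewer, approaching 1 with distance."""
    return 1.0 - math.exp(-density * depth_m)


def apply_fog(color: Color, fog_color: Color, depth_m: float,
              density: float = 0.35) -> Color:
    """Blend a pixel toward the fog color based on its real-world depth."""
    f = fog_factor(depth_m, density)
    r, g, b = (c * (1.0 - f) + fc * f for c, fc in zip(color, fog_color))
    return (r, g, b)


# A red pixel on a surface 3 m away, tinted toward grey fog.
fogged = apply_fog((1.0, 0.0, 0.0), (0.5, 0.5, 0.5), 3.0)
```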

Using the Depth API currently requires enabling experimental features on the Quest 3 headset by running an ADB command:

adb shell setprop debug.oculus.experimentalEnabled 1

Unity developers also have to use an experimental version of the Unity XR Oculus package and must be using Unity 2022.3.1 or higher.

You can find the documentation for Unity here and for Unreal here.

UploadVR testing Depth API occlusion.

As an experimental feature, builds using Depth API can't yet be uploaded to the Quest Store or App Lab, so developers will have to use other distribution methods like SideQuest to share their implementations of it for now. Meta typically graduates features from experimental to production in the subsequent SDK version.