SIGGRAPH is coming up the week of July 24 in Anaheim, CA. The computer graphics conference dates back decades, functioning as a showcase for technical advances that push forward the state of the art in movies, video games and everything else we make using computer graphics.

More than ever before, though, VR is becoming a central theme at the event. I reached out to ACM, the organization that runs SIGGRAPH, to find out what’s new this year and how VR is changing the conference. I heard back from Denise Quesnel, chair of the VR Village at the conference.

According to Quesnel, around one quarter of all the courses offered at SIGGRAPH now include material related to immersive reality, and this year’s event will offer the “most interactivity ever seen for immersive realities at SIGGRAPH, especially in terms of getting hands-on with demonstrations and getting a sense of embodiment and presence in VR.”

Tickets cost between $150 and $250 to attend the VR Village, where most of the virtual reality-related technologies are on display. Below is our edited Q&A with Quesnel, who outlined what to expect from VR at this year’s event.

Q: How is VR’s presence at the conference different this year from the past?

Denise Quesnel: At previous SIGGRAPH Conferences, it was common for VR- and AR-themed presentations, demonstrations and exhibits to have a predetermined focus area, both in terms of physical space and conceptual space. What we are seeing this year is an incredible opportunity for VR to permeate every program, and that is exactly what has happened. With VR and AR now more commercially available and having caught the attention of multiple industries, it is impossible to attend any SIGGRAPH program without finding an AR/VR focus.

We are doing two brand-new things this year. One is a new talks format called Experience Presentations, in which experiential content from VR Village, Emerging Technologies, Studio and Art Gallery is delivered as a talk. Some presenters are going to incorporate VR right into their presentations for the audience to see. Topics range from ‘Artistic VR Techniques’, in which Oculus Story Studio will present how an artist can create volumetric illustrations in VR with the Quill tool, to a breakdown of the creation of ‘Bound’, a demoscene VR game for PS4 created by Plastic Studio. We have ‘Production for VR’, where the Baobab team of Eric Darnell and Maureen Fan will present on agency and empathy for characters in their VR film ‘Invasion!’; Stephanie Riggs and Blair Erickson will talk about integrating human-computer interaction into immersive storytelling; Joe Farrell from Tangerine Apps will talk about making the The Jungle Book: Through Mowgli’s Eyes VR Experience in just 8 weeks; and the team from Magnopus will present ‘The Argos File’ VR series, which combines live action from the Nokia OZO camera system with VFX and game engine mechanics.

For programmers and individuals interested in rendering, there is ‘The Art & Science of Immersion’, which dives into bringing Google Earth to VR, the creation of Quill at Oculus, and Qt 3D, a data-driven renderer. Finally, there is a health-oriented VR presentation, “Agents of Change: Creation of VR for Health and Social Gain”. It features a team from Nationwide Children’s Hospital and Ohio State University who have created a VR application for pediatric pain management, and Dr. Walter Greenleaf, a VR pioneer from Stanford University, who will speak on how VR technology will transform healthcare.

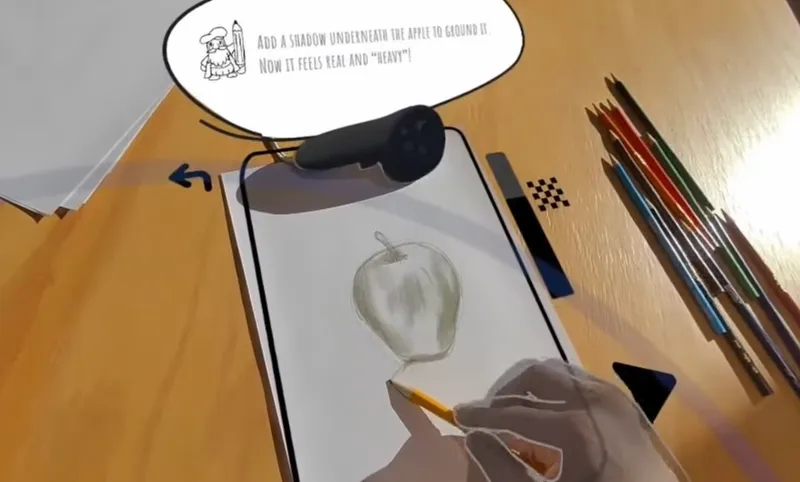

The second new development is the VR StoryLab, a space where attendees will be able to try 11 narrative-driven experiences in a circuit. The idea is to get people trying many different types of immersive story experiences in one go, in a 45-minute block. Essentially, attendees hop from one station to the next: they can watch ‘Invasion!’ and Google’s ‘Pearl’, create illustrations with ‘Quill’, witness racially motivated police brutality in ‘Injustice’, and drive a rover over the surface of Mars on terrain mapped by NASA’s JPL, just to name a few. We are calling this area a ‘Lab’ because attendees are encouraged to fill out brief surveys that give us insight into how they would like to experience immersive storytelling. This feedback is going to be critical in expanding future programs at SIGGRAPH, including the renowned Computer Animation Festival. Beyond giving us feedback that will shape the future of SIGGRAPH, we hope conference attendees also get to enjoy a diverse array of VR narratives. Some incredibly powerful stories are being told in there.

It is going to be really commonplace to see head-mounted displays at this year’s conference, along with tablets that can take 3D scans! I don’t just mean contributors at VR Village or Emerging Technologies, either, but conference attendees too. Whenever I get together with colleagues, at least one of us brings a portable HMD. Just a couple of weeks ago I was with a few friends, and one of them pulled out a Merge VR HMD in a donut shop in Santa Monica. The shopkeeper even gave it a go. I saw this happening at last year’s SIGGRAPH in LA, primarily with Google Cardboard and Samsung Gear VR. Oculus is still extremely popular with contributors for showing their demonstrations, since it is robust, easy to develop for and a quality item. There are also a few projects centered on the HTC Vive, as well as PS4 projects and Google Tango. I love the democratization of all these different technologies coming together at SIGGRAPH 2016: Google Cardboard will be available for attendees to take home, and quite a few workstations are going to get a boost from NVIDIA’s latest graphics cards. Ideally, we will show all the demos at the maximum available frame rate and graphics quality.

It has been an incredible ride seeing how far everyone has come since the first early development kits landed in the hands of developers. For a while there was a lot of fixation on the tech, and I feel like this has really been the year where people are stepping up to the next level with content creation and experiences that previously only existed in the imagination.

Q: Which projects are you most impressed or surprised by?

Denise Quesnel: I am just completely blown away by the level of detail and insight going into immersive realities projects in general now. By detail, I mean not only the visuals and audioscapes of the immersive environment but also the care committed to the user interface and interaction design.

One project in particular, called ‘Parallel Eyes’, uses first-person view sharing (through eye-tracking hardware and software) to explore how humans understand and develop viewing behaviors. Each user can see another person’s first-person video perspective as well as their own in real time, with up to four people at a time. They are setting up a large, untethered (nomadic) VR environment, complete with cardboard partitions, where attendees can play ‘hide and seek’. I think people are going to really enjoy this. I am also thrilled that we have the first screening of “GHOST IN THE SHELL: THE MOVIE Virtual Reality Diver” outside of Japan at the VR StoryLab. The visual and embodied experience of this project is unbelievable. I am also impressed by the team behind ‘Synesthesia Suit’, who are providing a full-body immersive experience to attendees in a VR environment they have created, coupled with suits that deliver vibro-tactile sensations over the entire body. They are also bringing ‘Rez’ for PS4 to experience with the suit.

I think the VR StoryLab films and the Experience Presentations I mentioned are going to be extremely in-depth and critical for sharing key knowledge on the topics being discussed. They were chosen with care, mainly to fill knowledge gaps that exist in industry today. There have been a lot of previous Panels and Talks that dive into general VR topics in a round-table format. This year I really wanted to get into as much detail as we could, within the time provided, on the creation of VR and AR across a wide variety of applications and creative approaches. This meant staying away from more generalized topics and instead focusing on the technical and conceptual details of VR/AR as it matures. These are going to be really popular sessions if last year is any indication: the production talks in particular were full to capacity.

Q: Are you seeing VR being used differently now than in the past?

Denise Quesnel: I mentioned earlier how impressive the amount of detail in user experience and interaction design has become. I find that the projects that incorporate gaze tracking, EEGs, heart monitors and other biofeedback methods are on an amazing path to success. There is a great opportunity here to explore bioaffective computing inside a VR environment through the use of biofeedback tools. Since a user will already be wearing a head-mounted display, and possibly headphones, microphones or other technical gadgetry, building biofeedback, haptic and sensing tools into this hardware and software doesn’t add much in terms of wearable gear. In previous years, with early tech, getting this data and programming it into real-time environments was inelegant, but today it is incredibly straightforward and effective. We have an installation at VR Village called “Inner Activity”, from the School of Cinematic Arts at the University of Southern California, that combines a wearable tactile bass system called the Subpac with their custom VR environment. Their use of virtual reality and infrasound-based therapy techniques is being explored as a healthcare application that draws on concepts from meditation.

Likewise, Disney Research is bringing a demo called IRIDiuM (Interactive Rendered Immersive Deep Media), which renders highly detailed, immersive content in real time according to the user’s tracked head pose. Then they take it to the next level: data from IMUs (inertial measurement units) is used to track the head and upper body, while EMG (electromyography) sensors detect hand gestures and object-grasping motions. Their real-time solver then estimates pose from these sensors to deliver a rich deep media experience.

It is also becoming increasingly common to use eye trackers in HMDs, and there have been a few instances of EEGs built into displays. Eye and gaze tracking is fairly straightforward in VR and AR, and while EEG data can at times be ‘noisy’, a huge amount of work is being done to analyze and ‘denoise’ raw EEG signals in real time. It is pretty incredible to think that VR environments can respond to your stress levels or attention, or even help improve memory and learning. Neurofeedback is fairly new in its application to the mainstream public, and it shows massive potential in immersive realities.