One, two, three… I counted slowly in my head, relaxing myself. As I did, I saw my orb of light begin to grow as my defenses strengthened against my opponent’s attack. I was engaged in an all-out ‘battle of the minds’ with the man next to me, each of us using brainwaves to control beams of energy that clashed in an epic tug of war. My defenses fully restored, I moved to the offense, focusing my mind and willing the beam to push harder against my opponent’s. I strained as he did, pushing back and forth, arm wrestling energy beams with our brains. Channeling my inner Gohan, I gathered my ‘ki energy’ and pushed as hard as I could for one final attack, finally overwhelming my opponent, to the delight of MindMaze CEO Dr. Tej Tadi.

Founded in 2012, MindMaze is a neurotechnology company based in Switzerland that has been focusing on combining brain machine interfacing with VR for medical purposes for three years now. The company was founded by Dr. Tadi, who has over ten years of experience in the field of neuroscience. The work they have been doing in that field so far has been fairly transformative, and Dr. Tadi was eager to tell me about it.

Walking over to a screen, he started up a video. In it, a stroke patient is sitting in her hospital bed looking at a screen, with a few electronic instruments around her. “She’s completely paralyzed on the left side,” says Tadi as the woman on the screen starts moving her right arm. The arm on the screen in front of her moves along as well, perfectly matching her motions. “The reason she sees it on the screen is because after brain surgery you can’t put [a headset] on sometimes,” Tadi explains. This is the first step of the therapy: conditioning the brain to associate the movement on the screen with the movement of the arm. Once that association has been established, the magic happens.

“What happens is the brain is contralaterally mapped, so the right part of the brain controls the left, and the left hemisphere does the right,” he continues as the scene shifts to the same woman moving her right arm, only this time the left arm is moving on the screen. “Now she has a right stroke, she can move the left, but when she’s moving the right hand, and she’s seeing something there, the brain area that’s responsible is saying, hey, hang on, I can still control that hand, let me start kicking some aiders in. And so slowly the recovery comes in. So it’s a game to play, which is fun and entertaining, because [her recovery] isn’t going to happen if she just does it once. She’s got to do it repeatedly to reinforce it, and there the gamification pieces kicked in.”

It was that gamification of neurotechnology for therapy that gave rise to the idea that the same technology could be applied to games in VR. “It was a natural fit to do VR with neuroscience and motion capture, because it just fits together naturally,” says Tadi. And so MindLeap was born: a subsidiary of the medical neurotechnology company that aims to bring medical-grade brain-machine interfacing (BMI), combined with muscular and movement data at zero latency, to modern VR and AR gaming. Coming at the problem from a neuroscience angle, they have “solved a lot of the problem pathways generally faced by the VR community, latencies and immersive experiences.”

MindLeap’s vision is ambitious, but their path towards it is clear. The team has developed a novel multi-peripheral system (which can be modularized) that combines a band of dry electrodes, which sense brainwaves and muscle activity, with a proprietary motion capture camera, all of which “fall back” on each other. Explains Tadi, “if I have movement data, muscle data, brain data, I can weigh for information, so I know that you’re going to move your hand, I can predict it from this, but I can also predict it from your muscle activity, and I can confirm it with my motion capture camera that you are moving the hand.” According to the company, this process eliminates the complex and time-consuming configuration that some other BMIs require, and it produces an extremely accurate, no-latency motion solution.
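For readers who want a concrete picture of what sensors that “fall back” on each other might look like, here is a minimal sketch. It is not MindLeap’s method, which the company has not published. It assumes each sensor reports a probability that the hand is moving along with a trust weight, and it simply takes a weighted average of whichever sensors are available. The names `Estimate` and `fuse` are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Estimate:
    """One sensor's guess (hypothetical structure, for illustration only)."""
    probability: float  # estimated chance the hand is moving, from 0 to 1
    weight: float       # how much this sensor is trusted right now


def fuse(brain: Optional[Estimate],
         muscle: Optional[Estimate],
         camera: Optional[Estimate]) -> Optional[float]:
    """Weighted average over the sensors that reported a value.

    A missing sensor is passed as None, so the remaining sensors
    carry the estimate on their own (the "fall back" behavior).
    """
    available = [e for e in (brain, muscle, camera) if e is not None]
    total_weight = sum(e.weight for e in available)
    if total_weight == 0:
        return None  # nothing trustworthy to report
    return sum(e.probability * e.weight for e in available) / total_weight


# Camera view is blocked, so brain and muscle data carry the estimate.
print(fuse(Estimate(0.8, 1.0), Estimate(0.6, 1.0), None))
```

A real system would presumably work on continuous signals and learned models rather than three hand-set numbers, but the fallback idea is the same: losing one sensor degrades the estimate instead of breaking it.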

I had a chance to demo the technology for myself, albeit in separate pieces. This is purposeful, as the company believes that its peripherals are something developers could choose to use à la carte. MindLeap’s HMD in its current state is not going to win any head-to-head battle with Oculus, Morpheus, or Vive; it simply isn’t there optically (only a 60-degree FOV) or ergonomically. However, while the early prototype was fairly uncomfortable, Tadi promises that the final version, of which he showed me a sleek mockup, will be much more comfortable. The mockup showed a slimmed-down band about the width of an Oculus Rift’s strap, with the electrodes embedded along the strap and along the top of the facemask, as opposed to the cage-like setup in the current prototype. The one pictured is for advanced robotics, like controlling an artificial arm. But optics aren’t the purpose of this project right now. “We are not innovating optics, we don’t want to get into that,” says Tadi. “We want to work with those [headset] makers who are doing a better job of it.” MindLeap is looking to work with potential partners, like Oculus for example, to integrate its technology into what already exists: “that is something that we want to do for sure.” That being said, the company is working on an HMD to release along with everything else that will be “almost competitive with what’s out there.”

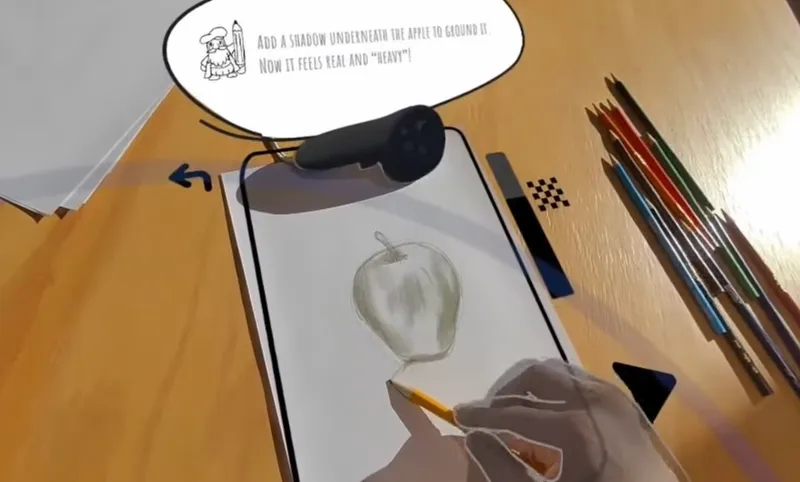

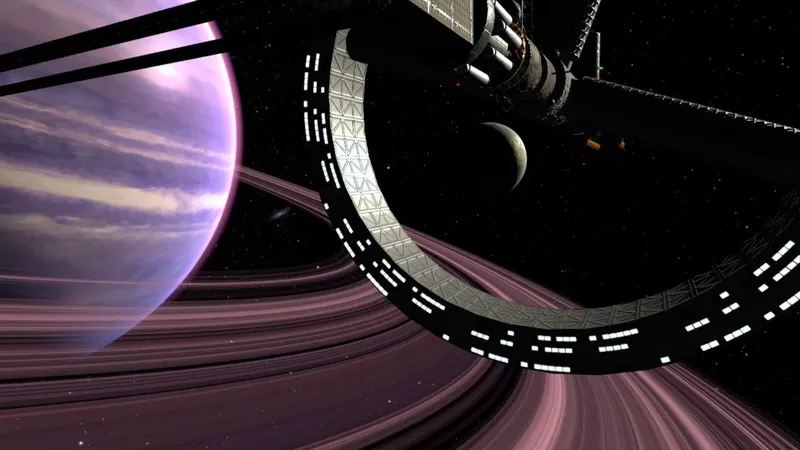

Lest you be turned off by the HMD’s optical and ergonomic shortcomings, the hand tracking and AR built into it are both quite impressive. For one, the headset can switch seamlessly between AR and VR. In the demo I was shown, I could turn one way and be in the real world, look down, and see all of my fingertips alight with flames, smoke billowing from them. Turning the other way, I was transported to a space environment, and my hand was represented in the virtual space by a tightly cube-mapped version of it. In both modes, I tried hard to break the tracking, flicking my fingers around as fast as I could, trying to shake the flames off. But try as I might, only once or twice did I see the flames lose track of my fingertips, and even then it was only one or two fingers, and it picked them right back up again. The tracking was very impressive, even though the demo that made use of it was fairly rudimentary.

Speaking to some potential use cases for this technology in gaming, Tadi offered an example: “if you’re in a first person shooter game, getting shot at, people firing at you, you are going to get stressed and anxious. The system could automatically detect that stress and trigger a cloaking mode for example, so you would go invisible.” But it also has potential for more than that: brain training games.

MindLeap is eager to get this technology into the hands of developers, who need not have a degree in neuroscience to work on the platform. “We’ve been able to bring that down to a layer where you can essentially say, oh that’s cool, I understand just the feature of it, and use it quickly. You don’t need to understand how the visual motor cognition works, how the multisensory areas work, we’ve figured it out for you. We’ve got all the timings and latencies and the science out ready so you can just say, I want these features, and then I can trigger this fire on or off.”

They are currently working on their API to get their SDK out, which they expect will be completed within the next “six months,” with a full developer kit arriving by the end of the year. They are looking to make their devices compatible with “the most popular game engines at this stage.” They also are expecting the device to be “competitively priced.”

The field of brain machine interfaces and neuroscience is incredibly fascinating, and according to leading physicist Dr. Michio Kaku, we are currently in its “golden age.” Dr. Tadi and I sat down and talked further about the future of BMI and VR. Our conversation went in some unexpectedly interesting directions, including talking about the future of brain haptics, which Dr. Tadi believes we will see in the consumer market “in the next five to six years.”

WILL: One of the things that’s really striking to me, seeing all this put together, you’re going to have people that are learning to manage their brain a lot better. You have people that play games, all the time. What do you think the social ramifications are going to be, having people so much more in tune with their own brains?

DR. TADI: Ah, I think it’ll be more harmonious, no? The thing is, it’s just like if you’re aware of your blood pressure modulations, or if you’re aware of your ECG when you’re running, and tachycardia stuff like that. That kind of awareness comes from the brain, the trick is to simplify it enough to make sure it explains the most number of states you’re experiencing at the same time. If that’s there I think people will just be able to harness it for other things. The fact that you can then intuitively understand but also get the validation, feedback, this is what is really happening with your brain when you’re doing this thing in your daily life, I think that’s what people want, not the part—it’s part of the quantified self, it’s the mental quantified self, to get the mental data in, because it’s not just about movement always, it’s motion and everything else. I think people will be more in tune with them, they will like it. That’s my take on it. The fact that you can harness the part of your brain in simple ways and understand it—yeah, we go there.

The implications that we can actually go from the medical space and make that switch to lifestyle, gaming devices, that synchronization of all devices in one place, that’s going to be truly powerful for people. The fact that they’re not stuck with it, too. It’s when they choose to choose one reality they want to be aware of, have it available in one place. They’re not plugging in 10 things, 10 different data types, it’s one device that’s going to do all the kind of data, the pieces they want to get. That’s one thing I think has hindered adoption in all these years. If you want motion capture, you have to put it in other computers, if you want goggles, you need. It’s so much plug in, it’s not one easy experience. Once it gets there, I think harnessing your brain is going to be fantastic.

WILL: So where’s the future for this? What are some of the remaining hurdles and things you guys are looking to accomplish in the next couple years?

DR. TADI: For me, like I put it, it’s multisensory integration. It’s multimodal integration in terms of hardware that facilitates multi-sensor integration. So, touch, proprioception, I still know this is my hand though I am not looking at it. So in the virtual world, I’m touching something, I still felt it, and you know my hand was positioned, that would be, in terms of the virtual reality experience, I think to go further, let’s say enhance our platforms to get touch and haptics in that picture. The long term vision for us is to enable people to completely experience VR as they should, so that’s long term vision. In general, it is to enhance the part of the brain. It’s funny. The bridge now is you’re not just gaming. You’re also training your brain to enhance its potential. You can monitor it, it’s no more of just saying you pass everything. You actually have limits that can modulate you need, train which aspects you need to train, because it’s your brain.

WILL: So you guys have provided us with the aux out from the brain. When are we going to get the aux in?

DR. TADI: [Laughs] I think everything is aux in, in some sense. In the true sense, we’re doing it at the medical level, still. It’s a good question. For example, let’s say I move, and I had caught something, and I told you, this is how your brain reacts. Now, do you want to feed that signal back into the brain to make yourself move better? Like, you get my point? That kind of feedback loop closed? We do that, but we do that for patients, because they need to get better. So how can you enhance, I think, enhance different aspects that can come, and how long? I think five years, six years, to a level where it’s useable, in a level where it’s consumable technology and not sophisticated medical intensive care.

WILL: So basically we could expect sort of haptic feedback via brain in the next five to six years?

DR. TADI: Actually it’s a very interesting thing. Think about this, and since you like the neuroscience piece too, there’s a thing called the rubber hand illusion. What it says is let’s say you put your hand, right? And someone is going to stroke my hand and your hand synchronously, but you don’t see your hand, you just focus on my hand. It just takes twenty seconds, and your brain refers the touch you feel to my hand. So you trick the brain into believing. I used VR to, let’s say, induce out of body experiences.

What we did is, you put your goggles on, and someone strokes your back. But in the goggles, you see your avatar being stroked in the back two meters in front of you, and then you ask them, where are you physically located? I’m there, because I see my touch there. We’re tricking touch without physically modulating touch. It’s funny how simply you can modulate your brain to experience different things.

Things like that are possible now. There’s motion capture, look at that, you can see your avatar, and you can see something being stroked, but suddenly you could see someone else’s hand in the same scene. You could probably be that’s me, it’s not my physical hand that’s moving. You can already do that in some sense.

WILL: But I would be able to feel if something is hard or soft or fuzzy?

DR. TADI: Yes, yes, you would want to know if this was a couch, or opening a door and things like that. That’s going to make it super real.

WILL: That exists already?

DR. TADI: It exists more in terms of the technology piece of it, wearing gloves. The way you can enhance and tweak it, it’s coming. Not for the force feedback, but tactile feedback into things, yes.

WILL: So, like, you put on the gloves and the gloves simulate that with your brain?

DR. TADI: And then the sync is there again, exactly. Personally, I’m really excited, because I’ve seen people who have tried to do things with taste, they implant chips on the tongue, and they want you to virtually taste an apple. That’s going to be insane. We don’t do that.

WILL: Who is doing that?

DR. TADI: That’s really basic research, it’s not out there. They’re doing taste, smell. Smell is easy to do, you can replicate that. Simple things matter too, if you’re driving a car and it’s a convertible and you feel the fans, the wind will help you feel. These basic things, things like taste, olfaction, touch, these are things that are coming, I think. That’s what it is. It’s a multisensory engine, so you can plug all this stuff up, MindLeap is going to put that stuff for you, and give you the experience you want, and that’s our vision.

---

Interested in learning more about BMIs, VR, and Medicine working together? Check out this epic video:

And for more on VR research in the Medical field check out these (Members of the MindMaze team in bold):

G. Garipelli, V. Liakoni, D. Perez-Marcos, T. Tadi (2014) Neural mechanisms of mirror feedback: An EEG study based on in virtual reality. 44th annual meeting of the Society for Neuroscience, Washington, USA, Nov 15-19, 2014.

V. Liakoni, G. Garipelli, D. Perez-Marcos, T. Tadi (2014) Increased ipsilateral cortical excitability with mirrored visual feedback in a virtual environment. 9th FENS Forum of Neuroscience, Milan, Italy, July 5-9, 2014.

M. Martini, D. Perez-Marcos, M. V. Sanchez-Vives (2014) Modulation of pain threshold by virtual body ownership. Eur J Pain. doi:10.1002/j.1532-2149.2014.00451.x.

G. Garipelli, V. Liakoni, D. Perez-Marcos, T. Tadi (2013) Neural correlates of reaching movements in virtual environments designed for neuro-rehabilitation. 43rd annual meeting of the Society for Neuroscience, San Diego, USA, Nov 9-13, 2013.