As reported by Michael Hicks of Android Central, a Unity panel at GDC last week revealed new details about PSVR 2, including ways developers will be able to harness eye tracking in their experiences and the performance benefits gained from doing so.

There was no full PSVR 2 reveal at GDC from Sony this year, but as we reported last week, they were showing the headset to developers at the conference alongside the Unity talk. We weren’t at the talk ourselves so we can’t verify the data, but hopefully it’ll be posted online later.

The big focus seemed to be on detailing the headset’s eye-tracking. As previously reported, PSVR 2’s eye-tracking will be able to provide foveated rendering. This is when an experience uses eye-tracking data to fully render only the area of the screen that the user is looking at directly, whilst areas in the user’s peripheral vision are rendered in less detail. Done right, this can greatly improve performance.
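To make the idea concrete, here is a minimal Python sketch of how a renderer might choose a resolution scale for each part of the image based on its distance from the gaze point. This is purely illustrative and not how PSVR 2 or Unity implement it: the function name, the normalized 0-to-1 screen coordinates, the two radii and the 0.25 minimum scale are all our own assumptions.

```python
import math

def shading_scale(pixel_xy, gaze_xy, inner_radius=0.15, outer_radius=0.45):
    """Return a resolution scale between 0.25 and 1.0 for a screen position.

    Coordinates are normalized to the 0..1 range. The radii and the 0.25
    floor are hypothetical values chosen for illustration only.
    """
    dist = math.dist(pixel_xy, gaze_xy)
    if dist <= inner_radius:
        return 1.0   # full detail where the user is looking
    if dist >= outer_radius:
        return 0.25  # lowest detail in the far periphery
    # Linear falloff between the inner and outer radius.
    t = (dist - inner_radius) / (outer_radius - inner_radius)
    return 1.0 - 0.75 * t
```

Passing the center of the screen as `gaze_xy` on every frame would approximate foveated rendering without eye-tracking, where the high-detail region never moves.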

According to Android Central, the Unity talk revealed that GPU frame times are 3.6x faster when using foveated rendering with eye-tracking on PSVR 2, or just 2.5x faster when using foveated rendering alone (which presumably only blurs the edges of the headset’s field of view, a technique commonly used on Quest headsets).

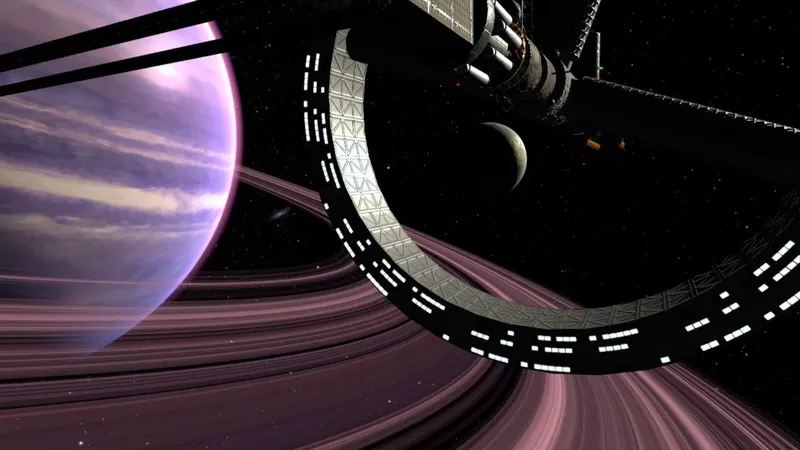

Running the VR Alchemy Lab demo with dynamic lighting and shadows on PSVR 2, frame time reportedly dropped from 33.2ms to 14.3ms. In another demo — a 4K spaceship demo — CPU thread performance was 32% faster and GPU frame time went down from 14.3ms to 12.5ms.
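For a rough sense of what those reported frame times mean, the short Python sketch below converts them into speedup factors and frame rates. The frame times are the ones reported from the talk; the helper names are ours, and the frame-rate figures assume frame time is the only limit on how fast frames are delivered, which won't hold exactly in practice.

```python
def speedup(before_ms, after_ms):
    """How many times faster the new frame time is than the old one."""
    return before_ms / after_ms

def frames_per_second(frame_ms):
    """Frame rate implied by a frame time in milliseconds."""
    return 1000.0 / frame_ms

# VR Alchemy Lab demo: 33.2ms down to 14.3ms
print(round(speedup(33.2, 14.3), 2))       # roughly 2.32
print(round(frames_per_second(33.2), 1))   # roughly 30.1
print(round(frames_per_second(14.3), 1))   # roughly 69.9

# 4K spaceship demo: 14.3ms down to 12.5ms
print(round(speedup(14.3, 12.5), 2))       # roughly 1.14
print(round(frames_per_second(12.5), 1))   # 80.0
```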

Moving beyond performance, developers also outlined the various ways eye tracking can be implemented in experiences on PSVR 2. The headset will be able to track “gaze position and rotation, pupil diameter, and blink states.”

This means you will be able to magnify what a player is looking at — particularly useful for UI design — or use eye data to make sure the game grabs the right item when the player is looking at something they want to pick up.
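As an illustration of the item-grabbing idea, here is a small Python sketch that picks whichever item sits closest to the player's line of sight, as long as it falls within a narrow cone around the gaze direction. It isn't based on any PSVR 2 or Unity API: the function names, the dictionary of item positions and the 10-degree cone are all hypothetical.

```python
import math

def angle_between(a, b):
    """Angle in degrees between two non-zero 3D vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    len_a = math.sqrt(sum(x * x for x in a))
    len_b = math.sqrt(sum(x * x for x in b))
    cosine = max(-1.0, min(1.0, dot / (len_a * len_b)))
    return math.degrees(math.acos(cosine))

def pick_gazed_item(eye_pos, gaze_dir, items, max_angle_deg=10.0):
    """Return the name of the item nearest the gaze ray, or None.

    `items` maps a name to a 3D position. The 10-degree cone is an
    assumed threshold for illustration.
    """
    best_name, best_angle = None, max_angle_deg
    for name, pos in items.items():
        to_item = tuple(p - e for p, e in zip(pos, eye_pos))
        if not any(to_item):
            continue  # item sits exactly at the eye position
        angle = angle_between(gaze_dir, to_item)
        if angle <= best_angle:
            best_name, best_angle = name, angle
    return best_name

items = {"key": (0.1, 0.0, 1.0), "mug": (0.5, 0.0, 1.0)}
print(pick_gazed_item((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), items))  # key
```

A game could combine this with hand position, so that the gazed-at item wins when two items are equally close to the controller.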

Developers will also be able to tell if the player is staring at an NPC or even if they wink at them, and can program custom NPC responses to those actions. Likewise, eye tracking can be used for aim assist when throwing an item, for example, so that the item is course-corrected and thrown in a direction closer to the player’s intention, based on their gaze.
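One simple way to picture gaze-based aim assist is as a blend between the direction the hand actually threw and the direction the player is looking. The Python sketch below shows that blend under our own assumptions: the function names and the `strength` parameter are hypothetical, and a real game would likely tune the correction far more carefully.

```python
import math

def normalize(v):
    """Scale a 3D vector to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    if length == 0:
        raise ValueError("cannot normalize a zero-length vector")
    return tuple(c / length for c in v)

def assist_throw(throw_dir, gaze_dir, strength=0.3):
    """Nudge a throw direction toward the gaze direction.

    strength=0 keeps the raw throw and strength=1 follows the gaze
    exactly. The 0.3 default is an assumed value for illustration.
    """
    t = normalize(throw_dir)
    g = normalize(gaze_dir)
    blended = tuple((1 - strength) * a + strength * b for a, b in zip(t, g))
    return normalize(blended)
```

Note that if the throw and gaze point in exactly opposite directions with `strength=0.5`, the blend cancels out and `normalize` raises an error, so a real implementation would want to handle that case.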

Eye-tracking will also mean more realistic avatars in social experiences, and the ability to create ‘heat maps’ of players’ gazes while playtesting games. This would let developers iterate on puzzles and environments to improve the experience based on eye-tracking data.
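A gaze heat map can be as simple as counting how many gaze samples land in each cell of a grid laid over the view. The Python sketch below shows that counting step, assuming gaze samples arrive as normalized (x, y) positions between 0 and 1. The function name and grid size are our own, and nothing here reflects an actual PSVR 2 or Unity tool.

```python
def gaze_heatmap(samples, cols=4, rows=3):
    """Count gaze samples per grid cell.

    `samples` is an iterable of (x, y) positions normalized to 0..1.
    Samples outside that range are ignored. Returns a list of rows.
    """
    grid = [[0] * cols for _ in range(rows)]
    for x, y in samples:
        if not (0.0 <= x <= 1.0 and 0.0 <= y <= 1.0):
            continue  # skip samples that fall outside the view
        col = min(int(x * cols), cols - 1)  # clamp x == 1.0 into the last column
        row = min(int(y * rows), rows - 1)  # clamp y == 1.0 into the last row
        grid[row][col] += 1
    return grid
```

Cells with high counts mark where playtesters spent the most time looking, which is the kind of signal a developer could use to spot an overlooked puzzle clue.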

Last month, it was reported that Tobii was “in negotiation” to supply PSVR 2’s eye-tracking technology.

In non-eye-tracking news, it was also confirmed at the Unity talk that developers will be able to create asymmetric multiplayer experiences for PSVR 2, where one player is playing in VR while others play using the TV. This was something we saw used in the original PSVR, too.

For more info on PSVR 2, check out our article with everything we know so far.