Apple Vision Pro is controlled with eye tracking and hand gestures, but how exactly?

In a WWDC23 developer talk, Apple design engineers explained how your eyes and hands work together to control visionOS, the operating system of Vision Pro.

Making selections and expanding menus with your eyes.

In visionOS, your eyes act as the targeting system, the equivalent of moving a mouse cursor or hovering your finger over a touchscreen.

User interface elements react when you look at them, making it clear they are being targeted. Looking at a menu bar will also expand that bar, and looking at a microphone icon will immediately trigger speech input.

Pinching your index finger and thumb together is the equivalent of clicking a mouse or pressing a touchscreen. This sends that "click" to whatever your eyes are looking at.

Clicking, scrolling, zooming, and rotating.

That's obvious enough, but how then do you perform other critical tasks like scrolling?

To scroll, you keep your fingers pinched and flick your wrist up or down. To zoom, you pinch with both hands held close together, then move them outward. To rotate something, you pinch with both hands the same way but move them upward and downward instead.

All of these gestures are guided by where you're looking, so you can precisely control the user interface without having to hold your hands up in the air or use laser pointer controllers.

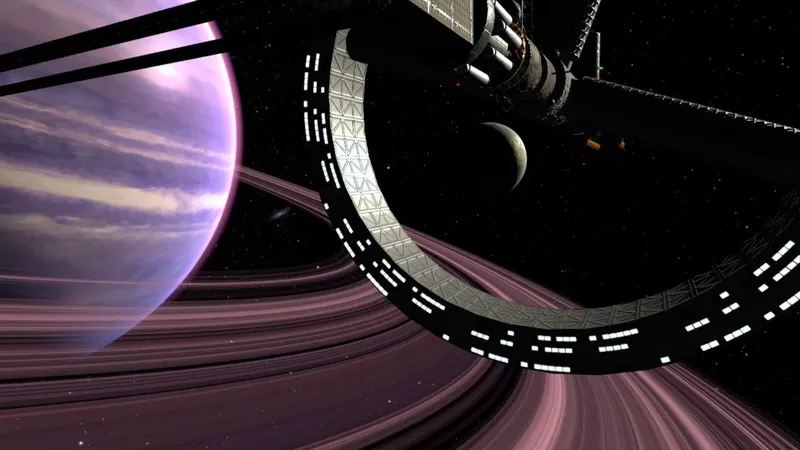

Zooming in and out of an image with eye tracking and hand gestures.

It's a strikingly elegant approach to AR/VR interaction, made possible by Vision Pro's combination of hand tracking and precise eye tracking. In theory, Quest Pro could have taken a similar approach, but its camera-based hand tracking may not have been reliable enough, and Meta may not have wanted to invest in an interaction system that wouldn't work on Quest 2 and Quest 3, neither of which has eye tracking.

Those who have tried Vision Pro report that this combination of eye selection and hand gestures made for a more intuitive interaction system than any headset they'd tried before. During our own hands-on time, it "felt so good to see Apple get this so right". It truly delivers on Apple's "it just works" philosophy.

Some tasks are better achieved by using your hands directly, though. For example, in visionOS you enter text by typing with both hands on a virtual keyboard. Other scenarios where Apple recommends using "direct touch" include inspecting and manipulating small 3D objects and recreating real-world interactions.

Meta is also moving toward direct touch in its Quest system software, but it uses direct touch for all interactions, mimicking a touchscreen, rather than only for specific tasks. This approach doesn't require eye tracking, but it does require holding your hands up in the air, which becomes fatiguing over time.