“Go ahead and take the controller,” says a voice from outside the headset.

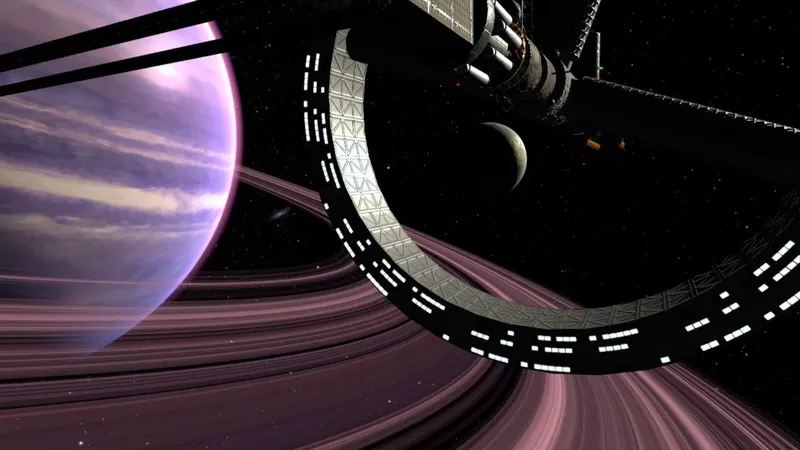

I’m standing in a red-cube-filled room, and the voice I am hearing is that of OTOY’s CEO Jules Urbach. I reach out and grab the Lighthouse controller in the space, and Urbach starts the demo. Suddenly a window into several photorealistic worlds opens up in front of me, attached to the top of the controller. I move the window closer to my face, examining the impressive detail as I peer into each world and rotate my head and the controller. As I do, I am able to peer around the individual scenes, each of which is being rendered simultaneously as a light field with unbelievable clarity.

http://www.youtube.com/watch?v=hQgHs0F0ipI

As you can see from the video, this is already quite impressive, but the really cool stuff was yet to come. By moving my thumb up and down on the Vive controller’s touchpad I can scale the scene up or down, and a click places the scene in space. As I cycle through the scenes, each represented in its own window, Urbach stops me and tells me to blow one particular scene up completely. I do, and suddenly I am completely surrounded by a photorealistic rendering of a certain billionaire-with-a-fetish-for-black-rubber-suit’s underground lair (in case that wasn’t enough of a hint, ‘Pow!’ ‘Oof!’ ‘Splat!’ should give you a sense of the era). I feel my eyes grow wide inside the HMD.

“I want to show you a little trick here,” says Urbach, “go ahead and press the button below the trackpad.”

As I do, a bounding box appears around me. “That is your movable space,” he says, as I begin to shuffle within it. The box itself is really small, with less than a foot of room to move in any direction, but even being able to take a single tracked step within a photorealistic virtual environment is game changing, and a sign of the amazing things coming for this nascent industry.

Once I have taken my first step in a light field environment, Urbach encourages me to test the boundaries: “Go ahead and walk past the outlined bounds.” As I do, the scene loses its crispness; pieces of it stretch and distort, but they show angles I had not yet seen. I press Urbach on what exactly is going on outside the boundaries, and it turns out to be some pretty cool stuff:

“If you think of ‘Daredevil vision,’ where Daredevil doesn’t see anything but he knows the area around him using sonar, we are figuring out what the shape of the world outside the light field is by looking in the light field and tracing back from it. It’s [a] point cloud, basically. We are able to generate that data [from as little as] 1% of the light field data; [that] is enough to build that point cloud and depth information, and the bigger the light field, the more that we can do.”

So, essentially, OTOY infers the shape of the scene from the light field data and uses it to extend the scene beyond its captured boundaries. It is a process OTOY “is going to keep working on and improving, and eventually [they] will have a mode where if you want to walk outside of that light field you can, and it maybe won’t look as perfect as it did [within the bounds], but it will look pretty good.” And according to Urbach, this vision isn’t very far off: “It is something that we are working on in the next couple months.”
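OTOY hasn’t said how its “Daredevil vision” works, so what follows is only a hypothetical sketch of the general idea Urbach describes: recovering depth by tracing back out of a light field. It assumes the simplest possible case, two neighboring views of the light field treated as a rectified stereo pair, where a feature that shifts by a disparity of d pixels between views lies at depth Z = f·B/d (f is the focal length in pixels, B the spacing between the views). The function names and sample numbers are made up for illustration and are not OTOY’s.

```python
from typing import List, Tuple

Point3D = Tuple[float, float, float]


def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Triangulate depth Z = f * B / d for two rectified views (illustrative assumption)."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive to triangulate a depth")
    return focal_px * baseline_m / disparity_px


def back_project(u: float, v: float, depth: float,
                 focal_px: float, cx: float, cy: float) -> Point3D:
    """Lift pixel (u, v) at a known depth into camera-space (X, Y, Z) with a pinhole model."""
    x = (u - cx) * depth / focal_px
    y = (v - cy) * depth / focal_px
    return (x, y, depth)


def point_cloud(matches: List[Tuple[float, float, float]],
                focal_px: float, baseline_m: float,
                cx: float, cy: float) -> List[Point3D]:
    """Turn hypothetical (u, v, disparity) matches into 3D points."""
    points: List[Point3D] = []
    for u, v, disparity in matches:
        if disparity <= 0:
            continue  # no usable disparity: point at infinity or a bad match, so skip it
        z = depth_from_disparity(focal_px, baseline_m, disparity)
        points.append(back_project(u, v, z, focal_px, cx, cy))
    return points


if __name__ == "__main__":
    # Hypothetical numbers: 500 px focal length, views 10 cm apart, image center at (250, 250).
    cloud = point_cloud([(350.0, 250.0, 25.0), (10.0, 10.0, 0.0)],
                        focal_px=500.0, baseline_m=0.1, cx=250.0, cy=250.0)
    print(cloud)
```

With those made-up numbers, a feature that shifts 25 pixels between views sits 2 m away, while the zero-disparity match is dropped. A real light field holds many views rather than two, so there are more matches to draw on, which is one plausible reading of Urbach’s point that a bigger light field lets OTOY do more.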

Unfortunately, due to the protected nature of the IP, we can’t show you the exact demo I just described, but Urbach was kind enough to let me record another scene that also renders the bounds, minus the not-quite-ready “Daredevil vision.” This scene does a decent job of conveying what I saw, though the (ahem) billionaire’s cave was fully immersive.

https://www.youtube.com/watch?v=DtiUE-LIMNc

As you can see, it’s incredibly impressive.

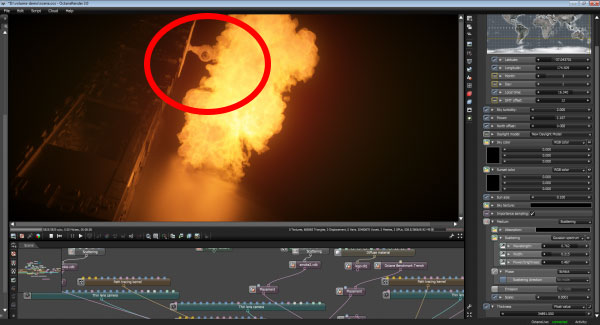

Each of the demos I was shown on the Vive was created using OTOY’s rendering pipeline, which consists of OctaneRender, the engine used to create the synthetic light field content, and OctaneRender Cloud, which lets creators “seamlessly and rapidly [produce] the rendered output with just a few clicks within OctaneRender Standalone or any OctaneRender plugin.” The result is a light field file that is actually “smaller than the Gear VR pictures,” at about “seven to fifteen megabytes per file.”

This kind of synthetically created content, Urbach believes, is the future of the medium:

“I really genuinely believe this artistically. Yes, you can capture stuff in the real world, and yes, it will look really good, especially with the ways we are doing it; but it is not going to have the same fidelity [as when] we have the entire scene completely at our disposal on the cloud and you can create any kind of light field you want with it. You don’t have to worry about where the camera is.”

But virtual reality isn’t the only application for this kind of light field technology; OTOY is also using it in augmented reality.

Urbach turned to the light field project’s technical lead, Hsuan-Yueh Peng, and asked for the Google Tango demo. Holding up the tablet, Urbach fired up one of the window scenes from the Vive, which appeared within the room we were in. Moving the tablet around the space, he demonstrated the same light field capabilities we saw before in VR. It was cool, but what he showed me next was cooler still: an AR version of their recent live light field capture.

https://www.youtube.com/watch?v=RlzuGmr9VPA

As Urbach explains, this is still an early prototype of their live capture system, which they aim to turn into a “point and shoot system” that will also support capturing scenes in motion in the light field format.

While all of the scenes that I saw on the Vive and Tango were static, on my way out I was able to see some of the early in-motion VR light field scenes that OTOY is working on for the Gear VR, including a six-second-long Star Wars demo. That’s right, light field Star Wars. The demo, which was rendered using the same pipeline as the synthetic scenes on the Vive, put me in the corridor of an Imperial starship as Stormtroopers ran past. It was crystal clear, and while it wasn’t running at its full frame rate (according to Urbach it was created at 240 FPS, well beyond the capabilities of the Gear VR), the motion looked amazing and realistic, even as I scrubbed through the individual frames. OTOY stayed quiet as to whether this was part of something bigger than a six-second tech demo, but a photo from OTOY’s recent press release suggests that it may be.

The other in-motion light field that I was able to see was the opening sequence from Batman: The Animated Series, which is part of a project that OTOY is working on for Batman’s 75th anniversary. The clip, which also moved quickly, presented the familiar 2D introduction from a perspective that felt totally new and wholly indescribable, yet it still followed the same familiar sequence. The full project, which will take you into a light field rendering of the Batcave from the series, currently has no release date, although OTOY officials say it is not far off.

OTOY is aiming to bring this technology to everyone: “The way we can help the ecosystem around VR and AR is by providing everything as a service, letting people develop their own apps around it, and taking care of all the hard stuff for them.” The hard work may not be done yet, but what OTOY has accomplished thus far is stunning and vitally important to the entire industry, bringing us a step closer to photorealism in VR.