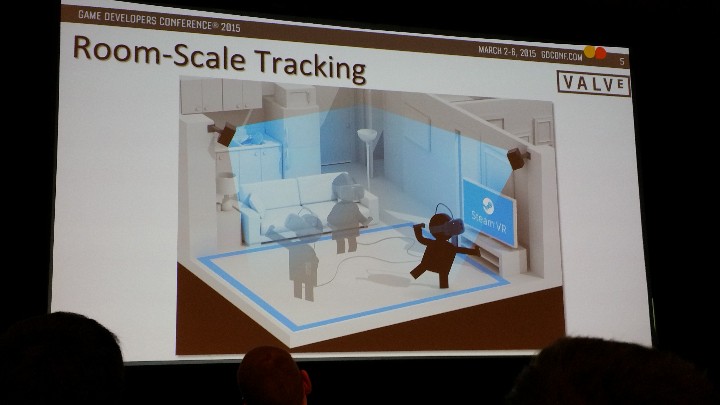

During the Mobile World Congress in Barcelona this year, HTC and Valve announced their flagship virtual reality headset called Vive VR. A few days later, demonstrations of the input tracking solution were shown to select members of the press as well as developers in the VR community at the Game Developers Conference (GDC) in San Francisco. They were calling their input solution the ‘Lighthouse.’ It gathered a lot of attention and hype that spread all over the internet, yet Valve and HTC remained relatively quiet about how it all worked. However, they laid out a timeline of their previous prototypes at GDC, giving viewers an insight into the technical details of their brand new virtual reality system.

Essentially, the headset and the controllers watch for light in order to determine where they are in 3D space in relation to each other. The system contains two base stations that look like softball-sized speakers and are placed strategically in the corners of a room. Both the head mounted display (HMD) and the handheld controllers have sensors embedded into their outer frames that detect light coming from these base stations. The headset, according to an interview with one of the Vive VR developers, also has pass-through cameras. The devices codenamed ‘Lighthouse’ are where the magic happens. They act like a beacon system, comparable to a real lighthouse found on a rocky shore. Similar to how boat operators see beams of light coming from a lighthouse’s lantern room, the Vive VR HMD and controllers constantly monitor for light. Information is then transmitted to a nearby computer, which calculates the positions of the headset and the controllers to an accuracy of 1/10th of a degree (as mentioned in the ‘Tracking and Room Scale’ section of the HTC VR website). This allows users to look around a virtual environment naturally. The system also includes a ‘chaperone’ function that draws a barrier in the VR world when a person approaches the walls of the actual room, which keeps people from running into them.
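To make the ‘chaperone’ idea a little more concrete, here is a minimal sketch of how such a check could work. This is purely our own illustration, not Valve’s implementation: the rectangular `PlayArea`, the function names, and the half-meter warning distance are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PlayArea:
    """Hypothetical axis-aligned rectangular play area on the floor, in meters."""
    width: float  # extent along x
    depth: float  # extent along z


def distance_to_nearest_wall(area: PlayArea, x: float, z: float) -> float:
    """Distance from floor position (x, z) to the closest wall.

    The result is negative if the position is already outside the area.
    """
    return min(x, area.width - x, z, area.depth - z)


def should_show_barrier(area: PlayArea, x: float, z: float,
                        warn_distance: float = 0.5) -> bool:
    """True when the tracked position is within warn_distance of a wall.

    warn_distance is an assumed value chosen for illustration only.
    """
    return distance_to_nearest_wall(area, x, z) <= warn_distance


if __name__ == "__main__":
    room = PlayArea(width=4.0, depth=3.0)
    print(should_show_barrier(room, 2.0, 1.5))  # middle of the room
    print(should_show_barrier(room, 3.8, 1.5))  # close to the far wall
```

A real system would presumably handle arbitrary room shapes and check the controllers as well as the headset, but the principle of comparing a tracked position against a known boundary would be the same.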

It is clear that the headset and controllers are picking up information from the Lighthouse base stations. The wavelengths at which the light is being broadcast have yet to be identified though. Looking at the hardware a little closer offers some clues as to how the tracking system might work. Earlier iterations of the devices show that there are two circular components located inside the base stations, one on the bottom and one on an adjoining side. There is also an array of what look to be square, surface-mount LEDs that light up red during VR experiences.

As our chief editor Will Mason tried out the Vive VR system for the first time at GDC 2015, I was able to watch the demo from outside the room, which gave me a glimpse into how the setup works while it was running. The first thing Mason did was sit in a chair. He was then told by an operator with a microphone to put on the HMD. Calibration of the system was most likely initiated at that point. Syncing the headset and the Lighthouse devices would put them on the same time frame. Mason was then directed to grab the controllers while he had the headset on. This was easy to do because the controllers were already being tracked and shown inside the headset.

Those who have tried other virtual reality headsets like the Oculus Rift DK2 or Samsung Gear VR will notice that it is hard to grab a nearby object with goggles covering one’s eyes. Sure, it is possible to glance down through the tiny slits near the nose, where light comes in, and see what’s down below. However, it has always been easier to hold the controller first and then put the headset on after. That was at least until Valve and HTC approached input in a different way. Instead of using a mouse, a keyboard, an Xbox controller, a PlayStation controller, Nintendo Wii Remotes, Razer Hydras, or Sixense controllers, they decided to develop their own devices specifically for VR.

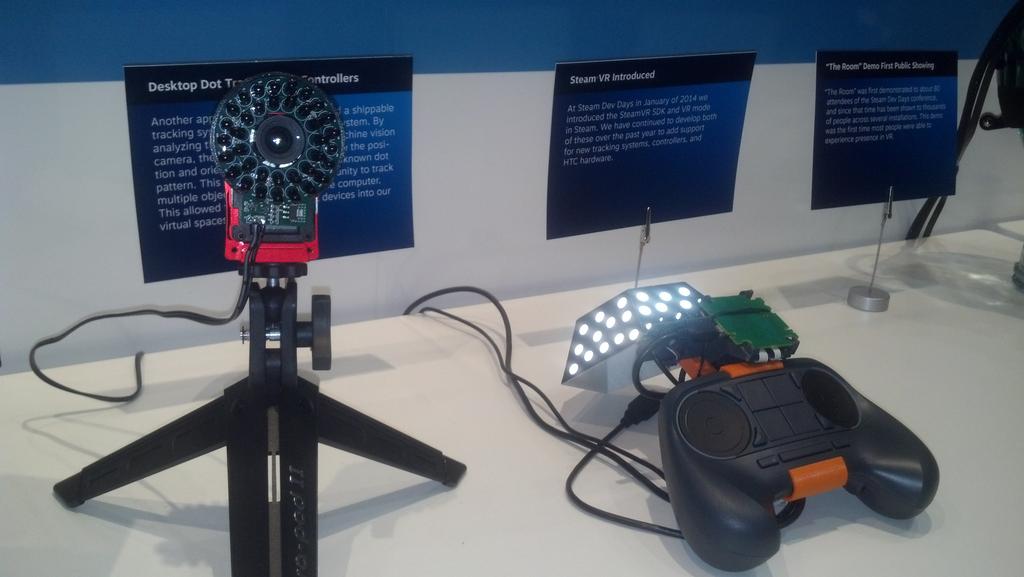

They began by modifying pre-existing Steam controllers that looked similar to an Xbox controller, apart from the track pads, which were unlike those on other controllers available for purchase. Around the same time, the company was internally hacking together new controllers in order to sync them up with a desktop dot tracking system for virtual reality use. Valve needed tracking mechanisms that would be easily shippable, and there would have to be something that could analyze the output of a stationary device. At first, a computer vision camera was developed to determine the position and orientation of devices that had known dot patterns on them. This approach is described as an ‘outside-in’ way of tracking because the observer camera watches the movements of the controllers. These particular experiments were happening around January 2014 after the introduction of Steam’s VR Room.

The Steam VR Room was an attempt by the researchers to take an alternate route with input tracking by flipping the system around. Rather than outside-in, the developers were rapidly progressing towards something called ‘inside-out’ tracking, where the observations are made on the headsets and the controllers themselves rather than by a camera. Originally, Valve’s early prototypes detected QR code-like patterns placed on the surrounding walls of a room. The marker that was always added first was known as the “Shitting Bird” because it resembled a creature of flight relieving itself from the air. It was strategically placed in the room and signified the starting X, Y, and Z coordinates (0,0,0). The additional custom codes were then put up somewhat randomly because the primary marker was the one that had the most significance.

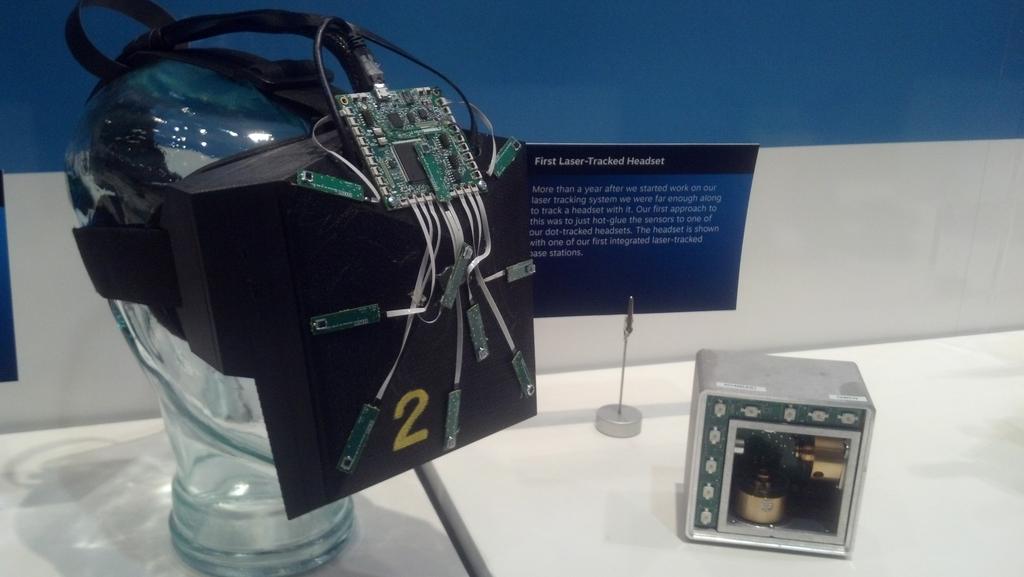

After showing “The Room” to about 80 attendees at the Steam Dev Days conference in January 2014, word started to spread about what Valve was working on. Eventually, “The Room” was shown to thousands of people across several installations. Although the demand for a walk-around virtual reality system like this was beginning to gain traction, it was clear that fiducial wallpaper was not something the majority of gamers would be willing to put up on their walls. More than a year later, the R&D team got their first laser-tracked headset working. They hacked together early prototypes and hot-glued sensors to the front of a dot-tracked headset, turning it into a laser-tracked one.

No longer was there a need to have a camera scanning for specific patterns. Instead, the desktop camera device was replaced with small electronic cubes with light sources embedded into them. The original base stations had what look to be LEDs along two edges of the cubes. There were also two gold, circular chambers inside. It is speculated that these two chambers have mirrors at a 45 degree angle within them, and that the chambers rotate along an axis. If this is true, then the likely scenario is that the lasers are beamed into those chambers (possibly from a series of laser diodes). Valve’s Chief Pharologist Alan Yates sent out a public post on Twitter confirming what types of lasers are being used.

Lighthouse base station emissions are Class 1 (unconditionally eye safe), while it contains class 3 sources they are enclosed & interlocked.

— Alan Yates (@vk2zay) March 5, 2015

Speculation leads us to think that the lasers reflect off of mirrors within those circular chambers at a 90 degree angle. This would split the initial beam into multiple beams of light. In theory, this would be similar to a technique seen at nightclubs and concerts for projecting lasers into the crowd. If Vive VR’s Lighthouse system does split lasers like this, then the headset could detect lines of light as they move across a room. From there, the computer could determine where the sources are in relation to the HMD. The sensors on the headset and controllers most likely pick up both the laser lines in the room and the LEDs (if that is what they actually are).

If those circular components are what we think they are, then the lasers emit from the bottom and the side. This allows the system to sweep the reflected lasers both horizontally and vertically. When the lines of laser light reach the headset or controllers, the computer can determine how long the light took to reach the sensors relative to a calibration point. The speed of light would then be accounted for in an internal algorithm, and the sensors (potentially photodiodes) could work out their position and orientation with that data.

UPDATE (3/9/2015 9:12pm PST): This technique of measuring for the speed of light to calculate the orientation comes from a Tested.com interview. This has yet to be confirmed though. As one Reddit user mentions in a comment thread, this may or may not be the case; but we are still researching the way the algorithm works. We will report back with any additional information we uncover.
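To get a feel for what the speed-of-light idea would demand from the hardware, here is a rough back-of-the-envelope sketch. The 5 meter room and the 1 millimeter position target are our own assumed figures for illustration, not numbers from Valve or HTC, and the function names are hypothetical.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # speed of light in a vacuum


def time_of_flight_seconds(distance_m: float) -> float:
    """Time for light to travel distance_m in a straight line."""
    return distance_m / SPEED_OF_LIGHT_M_PER_S


def timing_resolution_for(position_resolution_m: float) -> float:
    """Timing precision needed to tell apart two distances that differ
    by position_resolution_m, if distance were measured by time of flight."""
    return position_resolution_m / SPEED_OF_LIGHT_M_PER_S


if __name__ == "__main__":
    room_m = 5.0      # assumed distance from base station to sensor
    target_m = 0.001  # assumed 1 mm position resolution

    print(f"{time_of_flight_seconds(room_m) * 1e9:.1f} ns to cross the room")
    # -> 16.7 ns to cross the room
    print(f"{timing_resolution_for(target_m) * 1e12:.2f} ps timing resolution")
    # -> 3.34 ps timing resolution
```

Under these assumptions, each sensor would have to time the arrival of light to within a few trillionths of a second, which is a very tall order for small sensors on a consumer device. That is part of why the speed-of-light explanation should be treated with caution until it is confirmed.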

We were tipped off to the horizontal and vertical sweeping mechanisms through a conversation on Twitter with Road to VR. Below is an informative animation by Reddit user /u/Fastidiocy. Another Reddit user /u/SvenViking shared the link to the animated GIF, which we embedded here:

As seen during the GDC demos, the Lighthouse devices are placed directly across from each other. The original Lighthouse prototypes were wired and plugged directly into a nearby outlet. The demos at GDC had the devices located on top of bookshelves, which is likely where they will find themselves in people’s homes. The consumer version is rumored to be battery powered, eliminating the need for wires. Players will still need a good sized area to fully enjoy the VR experiences because the HMD wires will require space in order to keep them from being tangled up. Multiple gamers should be able to play together in this space as well, if there’s enough room.

The sensors on the HMD and the controllers themselves appear to be photoresistors aka light-dependent resistors (LDR) of some sort that register when the lasers hit each section of the devices. According to another Tweet by Alan Yates, multiple sensors need to detect the lasers at once in order to figure out the position and orientation of the device.

One base station is enough to track a rigid object with an IMU, five sensors need to be visible to acquire a pose, but it can hold with less. – Alan Yates

Yates goes on to describe how the base stations have many modes of operation, and that we’ve only seen one functionality of the devices so far.[1] In addition, it takes two or more visible light sources in order to track one sensor.[2] The virtual reality input solution, as told by Yates, “was designed from day one to be scalable, you can in principle concatenate tracking volumes without limit like cell towers.”[3] This means that additional base stations can be added to the input system. This would help track more complex movements if parts of the HMD or controllers become obstructed. For instance, the sensors on one device could be hidden from one of the base stations but could still detect lasers coming from the others. The tracking can also detect objects that don’t have an Inertial Measurement Unit (IMU), but having one reduces latency.[4]

UPDATE (3/9/2015 11:22pm PST): With the help of Reddit user /u/JonXP, we have come up with a simple way to describe how the HMD and controllers calculate their coordinates. Instead of accounting for the speed of light, it seems like the system may actually be measuring the time it takes for the lasers to sweep across a device. Let’s run through a scenario to give an example.

We can start with a line of reflected laser light pointing at the ground and moving upwards. As the line moves across the bottom of the headset, the sensors there detect the laser and record the time they received the signal. The line then continues to progress upwards. As each of the sensors along the edges reads the light, it makes note of the time at which it detected it. Once the line reaches the final sensor at the top of the HMD or controller, the code compares the timestamp from the first sensor at the bottom with the timestamp from the one at the top and determines the difference in time.

Reddit user /u/JonXP states further along in that comment thread that “with the horizontal and vertical lines of the lasers scanning the room, you get an x and y triangulation for any two points they cross.”
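The sweep-timing idea described above can be sketched in a few lines of code. Keep in mind that this is our own guess at the math, not a confirmed description of Valve’s algorithm. The 60 rotations per second, the sync flash that marks the start of each sweep, the quarter-turn offset, and every function name here are hypothetical.

```python
import math

ROTATION_HZ = 60.0             # hypothetical: rotations per second of the sweeping laser
SYNC_OFFSET_RAD = math.pi / 2  # hypothetical: beam points straight ahead a quarter turn after sync


def sweep_angle(t_sync: float, t_hit: float, rotation_hz: float = ROTATION_HZ) -> float:
    """Angle (radians) the beam has rotated between the sync signal and the
    moment the laser line crosses a sensor. Times are in seconds."""
    dt = t_hit - t_sync
    if dt < 0:
        raise ValueError("the laser hit must come after the sync signal")
    return 2.0 * math.pi * rotation_hz * dt


def bearing(t_sync: float, t_hit: float, rotation_hz: float = ROTATION_HZ) -> float:
    """Sweep angle measured relative to the base station's forward axis."""
    return sweep_angle(t_sync, t_hit, rotation_hz) - SYNC_OFFSET_RAD


def ray_direction(h_bearing: float, v_bearing: float) -> tuple:
    """Unit vector from the base station toward the sensor, built from one
    horizontal and one vertical bearing (x right, y up, z forward)."""
    x = math.tan(h_bearing)
    y = math.tan(v_bearing)
    z = 1.0
    norm = math.sqrt(x * x + y * y + z * z)
    return (x / norm, y / norm, z / norm)


if __name__ == "__main__":
    # A sensor hit a quarter of a rotation after sync on both sweeps
    # lies straight ahead of the base station under these assumptions.
    quarter_turn = 1.0 / (4.0 * ROTATION_HZ)
    h = bearing(0.0, quarter_turn)
    v = bearing(0.0, quarter_turn)
    print(ray_direction(h, v))
```

Each sensor only yields a direction from the base station this way, not a distance. Combining the directions to several sensors whose layout on the device is known is what would let the computer solve for a full position and orientation, which fits with Yates’s comment that multiple sensors need to be visible to acquire a pose.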

The main limitation of this type of laser tracking system is the question of what happens to the beams of light when they bounce off of mirrors and other reflective objects found inside a room. Valve is aware of this issue, and Alan Yates tweeted that “minor reflections are disambiguated easily.” A wall of mirrors would be nasty, but as Yates continues in his tweet, “what the hell are you doing in VR in a hall of mirrors [anyways]?!”

Despite small reflection issues that might occasionally pop up, the Vive VR system is robust enough to track multiple objects with sensors embedded into them. The tracking is so good that it seems like Valve has solved the problems associated with input control for virtual reality. The tracked movements are about as exact as one could expect from a consumer VR system. It induces levels of presence never felt before on any other VR platform (including the Oculus Crescent Bay Prototype). Valve has clearly set the standard for input here. On top of that, they are planning to give the technology away. As reported in an Engadget article, Valve president Gabe Newell compares the Lighthouse devices to USB ports on a computer and expects them to fundamentally change how people interact with virtual reality. “Now that we’ve got tracking, then you can do input,” Newell said in an interview with Engadget during GDC 2015. “It’s a tracking technology that allows you to track an arbitrary number of points, room-scale, at sub-millimeter accuracy 100 times a second.”

So we’re gonna just give that away. What we want is for that to be like USB. It’s not some special secret sauce. It’s like everybody in the PC community will benefit if there’s this useful technology out there. So if you want to build it into your mice, or build it into your monitors, or your TVs, anybody can do it. – Gabe Newell

The potential for the Lighthouse tracking system seems enormous. Developers will soon have the ability to create their own devices and custom controllers with sensors detecting light from at least two emitting sources (if they are given an SDK to work with). This opens up the possibilities for VR gloves, shoes, clothes, wands, staffs, guns, and whatever else developers can think up next. The consumer version of the Vive VR system is slated for release at the end of this year, just in time for the holiday season. With precision input tracking solutions like this, gamers will surely clear out their rooms in order to set up Valve’s VR configuration in their homes. Expect the Vive VR to be a hot gifting product this year. It’s a complete package deal. The tracking is better than anything before it. So, as the great Philip J. Fry would say in the epic sci-fi animated TV series Futurama: “Shut up and take my money,” Valve. I want this now!