Meta’s prototype photoreal avatars can now be generated with an iPhone scan.

Facebook first showed off work on ‘Codec Avatars’ back in March 2019. Powered by multiple neural networks, they can be driven in real time by a prototype VR headset with five cameras: two internal cameras viewing the eyes and three external cameras viewing the lower face. Since then, the researchers have shown off several evolutions of the system, such as more realistic eyes, a version requiring only eye tracking and microphone input, and most recently a 2.0 version that approaches complete realism.

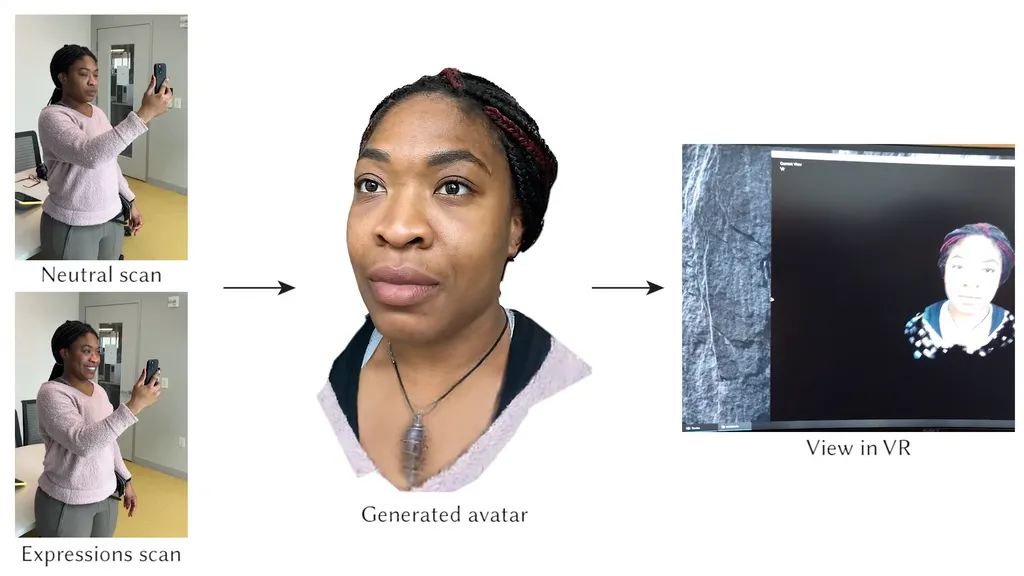

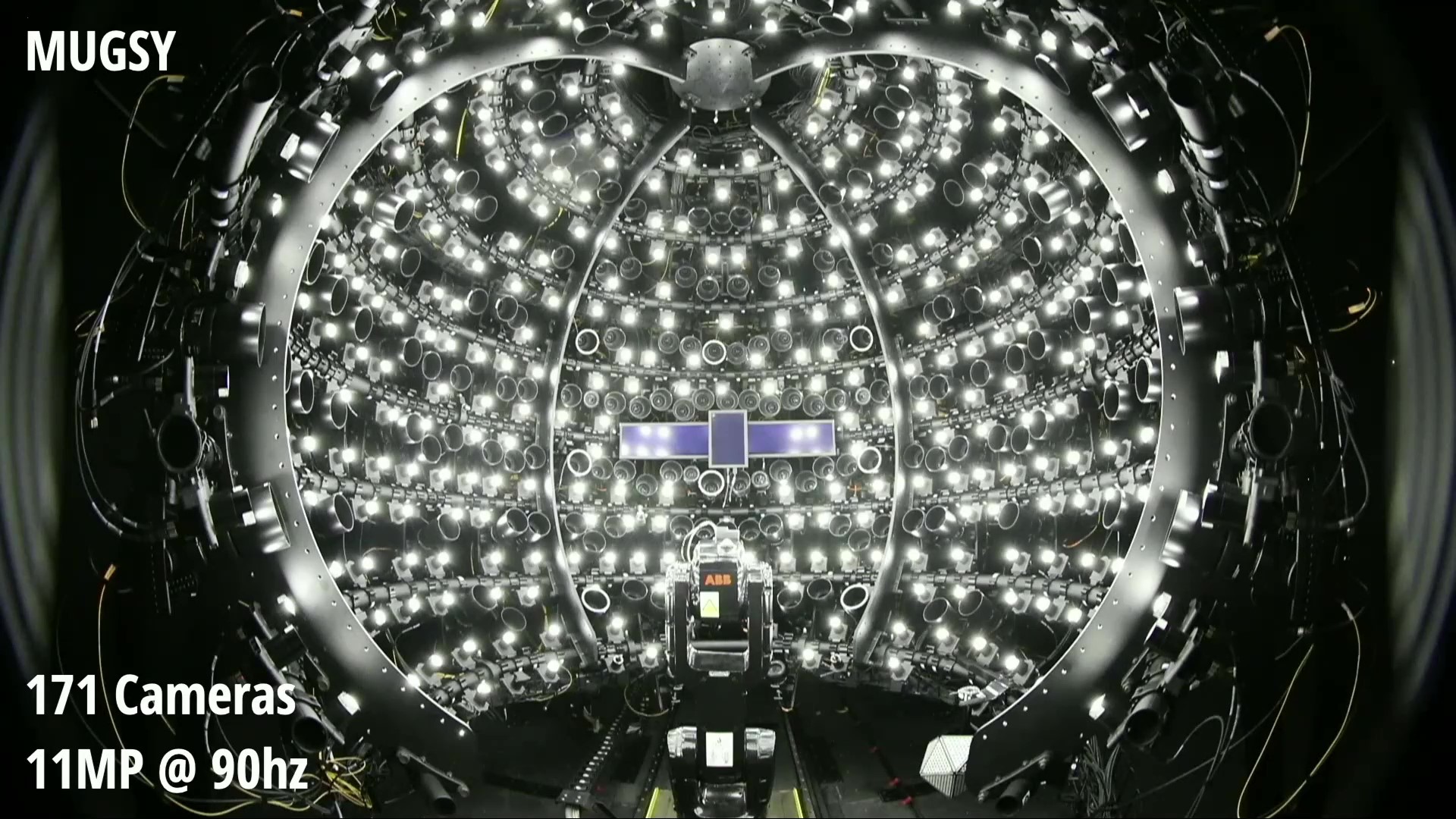

Previously, generating an individual Codec Avatar required a specialized capture rig called MUGSY with 171 high-resolution cameras. Meta’s latest research removes this requirement, generating an avatar from a scan taken with a smartphone that has a front-facing depth sensor, such as any iPhone with Face ID. You first pan the phone around your face while holding a neutral expression, then pan it again while copying a series of 65 facial expressions.

This scanning process takes three and a half minutes on average, the researchers claim. Actually generating the avatar in full detail, however, then takes six hours on a machine with four high-end GPUs. If deployed in a product, this step would likely happen on cloud GPUs rather than on the user’s device.


So how is it possible for what once required more than 100 cameras to now require only a phone? The trick is a Universal Prior Model (UPM) “hypernetwork”, a neural network which generates the weights for another neural network, in this case the person-specific Codec Avatar. The researchers trained this UPM hypernetwork by scanning the faces of 255 diverse individuals with an advanced capture rig similar to MUGSY but with 90 cameras.
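To make the hypernetwork idea concrete, here is a toy sketch in plain Python. It is not Meta’s UPM: the class name `HyperNetwork`, the per-person “embedding” input, and the layer sizes are all hypothetical, chosen only to show one network producing the weights and bias of another, smaller network that then runs on its own.

```python
import random
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


class HyperNetwork:
    """Toy hypernetwork: maps a per-person embedding (hypothetical input)
    to the weights and bias of a single linear 'target' layer."""

    def __init__(self, embed_dim: int, target_in: int, target_out: int, seed: int = 0):
        rng = random.Random(seed)
        self.target_in = target_in
        self.target_out = target_out
        # Total parameters the target layer needs: weight matrix plus bias.
        n_params = target_out * target_in + target_out
        # The hypernetwork's own (shared) parameters: one linear projection.
        # In a real system these would be learned from many people's scans.
        self.proj = [[rng.gauss(0.0, 0.1) for _ in range(embed_dim)]
                     for _ in range(n_params)]

    def generate(self, embedding: Vector) -> Tuple[Matrix, Vector]:
        """Produce person-specific target weights from an embedding."""
        flat = matvec(self.proj, embedding)
        n_w = self.target_out * self.target_in
        weights = [flat[r * self.target_in:(r + 1) * self.target_in]
                   for r in range(self.target_out)]
        bias = flat[n_w:]
        return weights, bias


def target_forward(weights: Matrix, bias: Vector, x: Vector) -> Vector:
    """Run the generated (person-specific) linear layer on an input."""
    return [y + b for y, b in zip(matvec(weights, x), bias)]


if __name__ == "__main__":
    hyper = HyperNetwork(embed_dim=4, target_in=3, target_out=2)
    person_a = [1.0, 0.5, -0.2, 0.3]
    w, b = hyper.generate(person_a)
    print(target_forward(w, b, [1.0, 2.0, 3.0]))
```

The design point the sketch illustrates is that only the hypernetwork’s parameters are shared across people; each individual gets their own target network simply by feeding in a different embedding, which is why a prior trained on many high-quality captures can help fill in detail that a quick phone scan cannot provide on its own.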

While other researchers have already demonstrated avatar generation from a smartphone scan, Meta claims the quality of its result is state of the art. However, the current system cannot handle glasses or long hair, and is limited to the head, not the rest of the body.

Of course, Meta still has a long way to go to reach this kind of fidelity in shipping products. Meta Avatars today have a basic cartoony art style. Their realism has actually decreased over time, likely so that larger groups and complex environments can run on Quest 2’s mobile processor in apps like Horizon Worlds. Codec Avatars may, however, end up as a separate option rather than a direct update to today’s cartoon avatars. In his interview with Lex Fridman, CEO Mark Zuckerberg described a future where you might use an “expressionist” avatar in casual games and a “realistic” avatar in work meetings.

In April, Yaser Sheikh, who leads the Codec Avatars team, said it’s impossible to predict how far the technology is from actually shipping. He did say that when the project started it was “ten miracles away”, and that he now believes it’s “five miracles away”.