How close are we to holographic video for virtual reality?

In a previous article, I argued that a light field camera system would be ideal for VR, because it allows for uncompromised experiences, including positional tracking. Six months on, how close are we to a holographic system? (Six months doesn’t seem like a long time, but there has been a lot of movement in the space… also, I’m excited and impatient.)

Well… there have been some new camera systems — Ozo, Jump, Jaunt’s One, among others — but every VR camera system I’ve seen is substantially the same: a big ball o’ cameras (BBOC). Nothing remotely holographic.

But wait, you say, there are ‘light field’ cameras, right? Haven’t we heard announcements, press releases, etc? Where’s this holographic future we talked about, if we’re already getting light-field baby steps? It’s complicated. Let’s try to simplify.

[Note: This is a pretty long and in-depth article, and it gets fairly dense at times. The TL;DR version: Current cameras aren’t really capturing light fields, and we won’t get ‘holographic’ playback until we have many, many more cameras in the arrays. But it’s not hopeless, and building on current systems will eventually give us great VR, AR, and even footage for holographic displays. Back to the regular programming….]

A rose by any other… Oh wait, that’s not even a rose

I think the term ‘light field’ has gotten muddled, so I’ll try not to do the same to the term ‘holographic’, even though my use of it here is somewhat metaphorical. So I asked Linda Law, a holographer with decades of experience, about the possibility of holographic light field capture. She laid it out in pretty stark terms: we need a lot, lot more cameras.

“The amount of data that goes into an analog hologram is huge. It’s a vast amount of data, way more than we can do digitally,” Law says. “That has to be sampled and compressed down, and that’s the limitation of digital holography right now, that we have to do so much compression of it.” (For those interested in learning more, her site and upcoming course cover the history and future practice of holography.)

But how much data does a hologram require, exactly? It depends on the size and resolution of the hologram… but here’s a rough approximation: for a 2-square-meter surface, you would need about 500 gigapixels (or ‘gigarays’) of raw light field data, taking up more than a terabyte per frame. At 60 frames per second (you wanted light field video, right?), we’re talking about 320 petabytes/hour (500 billion rays * 3 bytes/ray for 8-bit color * 60 frames/second * 3600 seconds/hour… equals a whole lotta hard drives).
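Here’s that storage arithmetic as a quick Python sketch. It uses only the figures from the paragraph above (500 billion rays per frame, 3 bytes per ray for 8-bit RGB, 60 frames per second) and assumes decimal units (1 TB = 10^12 bytes, 1 PB = 10^15 bytes); the constant and function names are just illustrative.

```python
# Back-of-the-envelope storage estimate for raw light field video.
# Figures from the text: 500 billion rays per frame, 3 bytes per ray
# (8-bit R, G, B), 60 frames per second. Decimal units throughout.
RAYS_PER_FRAME = 500e9
BYTES_PER_RAY = 3
FPS = 60
SECONDS_PER_HOUR = 3600


def bytes_per_frame(rays: float = RAYS_PER_FRAME,
                    bytes_per_ray: int = BYTES_PER_RAY) -> float:
    """Raw, uncompressed size of a single light field frame."""
    return rays * bytes_per_ray


def bytes_per_hour(fps: int = FPS) -> float:
    """Raw size of one hour of light field video at the given frame rate."""
    return bytes_per_frame() * fps * SECONDS_PER_HOUR


if __name__ == "__main__":
    print(f"per frame: {bytes_per_frame() / 1e12:.1f} TB")
    print(f"per hour:  {bytes_per_hour() / 1e15:.0f} PB")
```

One frame comes to 1.5 TB, and an hour lands at roughly 324 PB, before any compression at all.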

Now, an important aside about holography (note the lack of quotes): a proper hologram doesn’t require a headset, because it’s literally recreating light fields. Everything else we’re talking about is trying to digitally simulate them, or use data as raw material to synthesize views. (Think about that for a moment: your credit cards all have little stickers on them that literally create light fields. The world is an awesome place.)

It seems like a fully ‘holographic’ system is a long way off. But how close are we in human, perceptual terms? In other words, can we get close with a BBOC, and what would that look like? Let’s try to develop an intuition.

Review: what are light fields?

A ‘light field’ is really just all the light that passes through an area or volume. (The nerdier term for it is ‘plenoptic function,’ but don’t throw that around at cocktail parties unless you like getting wedgies.) Physicists have been talking about light fields since at least 1846, but Lytro really popularized the idea by developing the world’s first consumer light field camera back in 2012. So it’s an old idea that’s only recently relevant.

And that idea is actually pretty simple, once you really understand it. It turns out you’re surrounded at all times by a huge field of light, innumerable photons zipping around in all directions. Like the Force, you’re constantly surrounded by light fields, and they pervade everything. (Except there’s no ‘dark side’ to the plenoptic function: no photons, no light field. Sorry, Vader.)

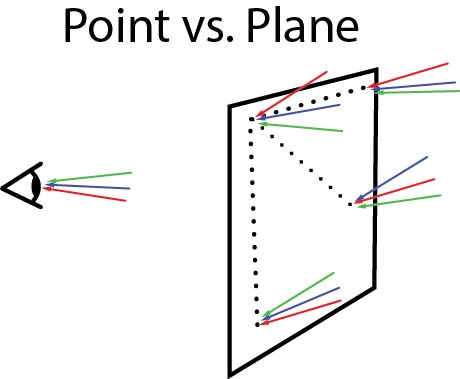

A light field is technically five-dimensional: the three spatial dimensions (say, x, y, z), plus two angular dimensions (phi, theta). At any point in space (x1, y1, z1), light rays are zipping around in all directions (every combination of phi and theta, covering the full sphere). But as long as the light rays are moving through empty space, they travel in straight lines and don’t change along the way, so recording each ray where it crosses a 2D surface tells you everything about it throughout the 3D volume. So holographic light fields are 2D spatial + 2D angular = 4D total.
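To make the 5D-to-4D step concrete, here’s a small sketch (my own illustration; the function name is hypothetical) that labels a ray by the point where it crosses a reference plane at z = 0, plus its two direction angles. Two points on the same ray in empty space map to the same four numbers, which is why one spatial dimension drops out. (A single plane can’t label rays running parallel to it; a real capture surface would be closed, like the camera ball itself.)

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def ray_to_4d(point: Vec3, theta: float, phi: float) -> Tuple[float, float, float, float]:
    """Map a 5D sample (x, y, z, theta, phi) to 4D light field coordinates.

    theta is the polar angle from the +z axis and phi is the azimuth, so the
    ray direction is (sin(theta)cos(phi), sin(theta)sin(phi), cos(theta)).
    Because light doesn't change along a ray in empty space, we can slide the
    point along the ray until it hits the plane z = 0 and keep only (u, v).
    """
    x, y, z = point
    dx = math.sin(theta) * math.cos(phi)
    dy = math.sin(theta) * math.sin(phi)
    dz = math.cos(theta)
    if abs(dz) < 1e-12:
        raise ValueError("ray is parallel to the reference plane")
    t = -z / dz  # distance along the ray to the z = 0 plane
    return (x + t * dx, y + t * dy, theta, phi)


if __name__ == "__main__":
    theta, phi = math.radians(30), math.radians(45)
    start = (0.1, -0.2, 0.5)
    step = 0.3  # a second point 0.3 units further along the same ray
    later = (
        start[0] + step * math.sin(theta) * math.cos(phi),
        start[1] + step * math.sin(theta) * math.sin(phi),
        start[2] + step * math.cos(theta),
    )
    print(ray_to_4d(start, theta, phi))
    print(ray_to_4d(later, theta, phi))  # same (u, v, theta, phi), up to rounding
```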

Here’s the less-technical, VR-specific version of it: a light field camera would capture a window into a VR world. The camera defines the outside of the ‘bounding box’ that a VR viewer can move around inside while having an uncompromised, 360-degree stereo view. I experienced light field rendering and video at Otoy; my writeup is here. (It was awesome.)

So, if you can capture and recreate a light field with high enough fidelity, the result is ‘holographic.’ And that’s useful if you’re operating in VR, because you can allow a VR viewer to have positional tracking — to move his head side to side, forward and back, look straight up or down or even tilt his head without the illusion (or stereo view) breaking. Even for seated VR, that amount of freedom helps with immersion: any time you move around without positional tracking, the entire virtual universe is glued to your head and moves around with you. Thanks to Otoy, I know this isn’t theoretical; the positionally-tracked experience is truly better, and I highly recommend it. Even seated in an office chair, the difference was obvious… palpable.

Let me tell you about our additional cat-skinning technologies

So we’ve already established that the data requirements for fully holographic capture are completely bonkers. This is why BBOCs are doing something a little… different. Once you’ve got cameras pointed outward in all directions, you’ve got seams and stitching issues to contend with. But even assuming you crack that, viewers also want positional tracking (and stereo).

(Cruel trick: if you’re demoing VR video content without positional tracking to someone, have them jump forward while standing. Their rapid acceleration, and the fact that the world stays stationary, is extremely weird… on second thought, never do this.)

The Otoy demo shows how much better VR can be with positional tracking, and so does CG content played back in a game engine. Only live action VR has this inherent limitation. So it’s a really, really important selling point for live action content to be at least as good as computer-generated content. It’s also why NextVR, Jump, and presumably Jaunt are all doing 3D modeling and painting the resulting models with pixel data: to give you some amount of ‘look around’, so their footage isn’t obviously inferior to CG content. This is… maybe a half-measure toward holographic imaging. Okay, not quite half; more like 0.2%, actually. Let me explain more… after the break. [Record scratch.]

Dead-eyed models, and other beautiful tragedies

Holographic VR requires imagery of objects not only in every direction, but also from every perspective. As you move in VR, objects should parallax correctly, and reflections and refractions should look correct too: the image of the sky reflecting from the hood of a car, the glint off your virtual grandma’s glasses, etc.

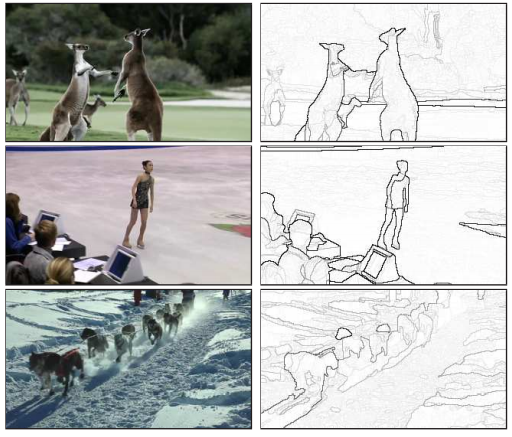

If done well, the 3D model approach can give pretty good parallax under good conditions. Computer vision algorithms can pretty reliably find object edges, calculate object distances, and interpolate how much of the background should be visible around an object at each intermediate, interpolated view.
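Here’s a toy, one-dimensional version of that ‘3D model’ idea. Given a scanline of colors and a per-pixel depth (both made up here), shift each pixel by a disparity proportional to baseline / depth to synthesize a new viewpoint, keep the nearest surface when two pixels land on the same spot, and mark spots nobody covered as holes. This is a generic depth-based view synthesis sketch with hypothetical names and parameters, not any vendor’s actual pipeline.

```python
from typing import List, Optional


def synthesize_view(colors: List[str], depths: List[float],
                    baseline: float, focal: float) -> List[Optional[str]]:
    """Forward-warp one scanline to a camera shifted sideways by `baseline`.

    Disparity (in pixels) = focal * baseline / depth, so near pixels move more.
    A z-buffer keeps the nearest surface; None marks holes (disocclusions)
    where the original view never saw the background.
    """
    width = len(colors)
    out: List[Optional[str]] = [None] * width
    zbuf = [float("inf")] * width
    for x, (color, depth) in enumerate(zip(colors, depths)):
        shift = round(focal * baseline / depth)
        nx = x - shift  # moving the camera right moves content left in the image
        if 0 <= nx < width and depth < zbuf[nx]:
            zbuf[nx] = depth
            out[nx] = color
    return out


if __name__ == "__main__":
    # Hypothetical scene: a near object "F" (depth 1) in front of a far wall "B" (depth 10).
    colors = ["B"] * 4 + ["F", "F"] + ["B"] * 4
    depths = [10.0] * 4 + [1.0, 1.0] + [10.0] * 4
    print(synthesize_view(colors, depths, baseline=0.2, focal=10.0))
    # ['B', 'B', 'F', 'F', None, None, 'B', 'B', 'B', 'B']
```

The near object slides two pixels while the far wall barely moves, and the two `None` entries are background the original camera never saw; a real system has to fill them by interpolation or from another camera. Note that nothing in this model changes a surface’s color as the viewpoint moves.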

But what this approach misses is the very nature of light fields. If the scene can be represented as solid objects painted with pixels, there’s no need for light fields: a series of 3D objects is a rather compact and effective way to describe these simple scenes. But of course, real scenes aren’t that simple, and the view-dependent light this description leaves out (reflections, refractions, sparkle) is exactly what distinguishes cheap animation from the real world.

3D animators will be familiar with both the terminology and the stakes here. When we’re simulating light bouncing around a scene, we get much more accurate results if we allow the light to reflect off of shiny surfaces, rather than simply scattering. Pixel data can fake this effect: for a single perspective, the surface finish will be ‘encoded’ in the pixels captured by the BBOC, so you can ‘trick’ the eye into seeing more detail in a model with baked-in highlights and shading. There’s even a fun analog for this in the real world: it’s how trompe l’oeil paintings work, using shading to trick you into thinking an object’s underlying geometry is different from what it is.

(From http://www.hikarucho.com/)

Strangely effective. But… but these trompe l’oeil illusions tend to break when you start to move around; the implied geometry (and shading) is only accurate for a single point of view. As you walk around a perspective painting, the highlights and specularity will stay ‘glued’ to the model, which breaks the illusion. Also, the implied geometry won’t parallax correctly; objects won’t occlude each other or themselves in exactly the right way. Here’s an extreme example:

So the quality of these reconstructions will hinge on the accuracy of the 3D model and the subject matter, though they can’t reproduce specularity unless they’re informed by light field techniques.

Because of this, some materials simply won’t look correct; everything will look like it’s painted matte. Having a 3D artist ‘touch up’ a model captured by a BBOC is absolutely a possibility… and the best VR right now tends to blur the line between live action and CG, but ohmygod it’s expensive. In other words, BBOCs that aren’t trying for holographic reconstruction might make their subjects look… strange, barring significant retouching in post. This isn’t a small issue; the dead eyes of really bad 3D animation are partially due to a lack of specularity.
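A minimal illustration of why baked-in highlights break, using the textbook Phong shading model purely as an example (the vectors and shininess exponent below are made up): the diffuse term depends only on the light and the surface normal, while the specular term depends on where the viewer is. A texture captured from one camera bakes in whatever specular value that camera saw, so replaying it from another viewpoint leaves the highlight glued in place.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def normalize(v: Vec3) -> Vec3:
    n = math.sqrt(sum(c * c for c in v))
    return (v[0] / n, v[1] / n, v[2] / n)


def dot(a: Vec3, b: Vec3) -> float:
    return sum(x * y for x, y in zip(a, b))


def reflect(l: Vec3, n: Vec3) -> Vec3:
    """Mirror the to-light vector l about the unit normal n: 2(n.l)n - l."""
    k = 2 * dot(n, l)
    return (k * n[0] - l[0], k * n[1] - l[1], k * n[2] - l[2])


def phong_terms(normal: Vec3, to_light: Vec3, to_viewer: Vec3,
                shininess: float = 50.0) -> Tuple[float, float]:
    """Return (diffuse, specular) intensities for one surface point."""
    n, l, v = normalize(normal), normalize(to_light), normalize(to_viewer)
    diffuse = max(dot(n, l), 0.0)  # Lambert term: the viewer doesn't appear at all
    r = reflect(l, n)
    specular = max(dot(r, v), 0.0) ** shininess  # peaks when the viewer sits on r
    return diffuse, specular


if __name__ == "__main__":
    normal, light = (0.0, 0.0, 1.0), (1.0, 0.0, 1.0)
    # Same surface, same light, two viewer positions.
    for viewer in [(-1.0, 0.0, 1.0), (0.0, 0.0, 1.0)]:
        d, s = phong_terms(normal, light, viewer)
        print(viewer, f"diffuse={d:.3f}", f"specular={s:.3f}")
```

Moving the viewer leaves the diffuse value untouched but takes the specular term from a full highlight to essentially nothing; a painted-on model can only ever show one of those.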

We are the 99.8%

So, I claimed the current BBOC approach is only 0.2% of the way there. As our consulting holographer Law describes it, current camera arrays face an uphill battle.

“It’s not going to come close to what a real hologram’s going to give you… you’ve got an infinite number of views in a real hologram.” Of course, nothing ‘infinite’ is very practical, and even ‘uncountably large’ is generally just the province of the analog domain. How close can we get in digital?

Let’s use the Jaunt One as our example of a cutting-edge BBOC. Assuming it’s a ball about 40 cm in diameter, the light field passing through its surface covers about half a square meter (4πr², with r = 20 cm). Its 16 lenses each have an aperture about 1 millimeter across (~3 mm focal length divided by f/2.9), so together they sample only about 0.000013 square meters, a few thousandths of a percent of the incident light field. (So calling it 0.2% is, if anything, generous.) The rest of the light, well over 99.8% of it, is absorbed by the cool industrial design (and the lenses’ irises). These numbers are almost identical for other arrays.
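Here’s that sampling estimate as a short Python sketch, assuming (as the paragraph does) a ~40 cm diameter ball, 16 lenses, and apertures of roughly 3 mm focal length at f/2.9. It ignores each lens’s limited field of view and treats every aperture as a flat disc, so it’s a rough upper bound, not a measurement. Under these assumptions it comes out to a few thousandths of a percent, comfortably below the 0.2% shorthand this section uses.

```python
import math

# Assumptions from the text: ~40 cm ball, 16 lenses, ~3 mm focal length at f/2.9.
BALL_DIAMETER_M = 0.40
NUM_LENSES = 16
FOCAL_LENGTH_M = 3e-3
F_NUMBER = 2.9


def sphere_area(diameter: float) -> float:
    """Surface area of a sphere: 4 * pi * r^2."""
    r = diameter / 2
    return 4 * math.pi * r * r


def aperture_area(focal_length: float, f_number: float) -> float:
    """Area of a circular entrance pupil whose diameter is focal length / f-number."""
    d = focal_length / f_number
    return math.pi * (d / 2) ** 2


def sampled_fraction() -> float:
    """Share of the ball's surface area covered by lens apertures."""
    collected = NUM_LENSES * aperture_area(FOCAL_LENGTH_M, F_NUMBER)
    return collected / sphere_area(BALL_DIAMETER_M)


if __name__ == "__main__":
    print(f"surface area:   {sphere_area(BALL_DIAMETER_M):.3f} m^2")
    print(f"lens apertures: {NUM_LENSES * aperture_area(FOCAL_LENGTH_M, F_NUMBER):.2e} m^2")
    print(f"fraction:       {sampled_fraction():.4%}")
```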

Okay, so are we sunk? If lenses require us to throw away most of our light field, maybe we’ll never get our holographic camera. Except… maybe we don’t need to get all of it.

Pretty ripples in the fabric of space-time

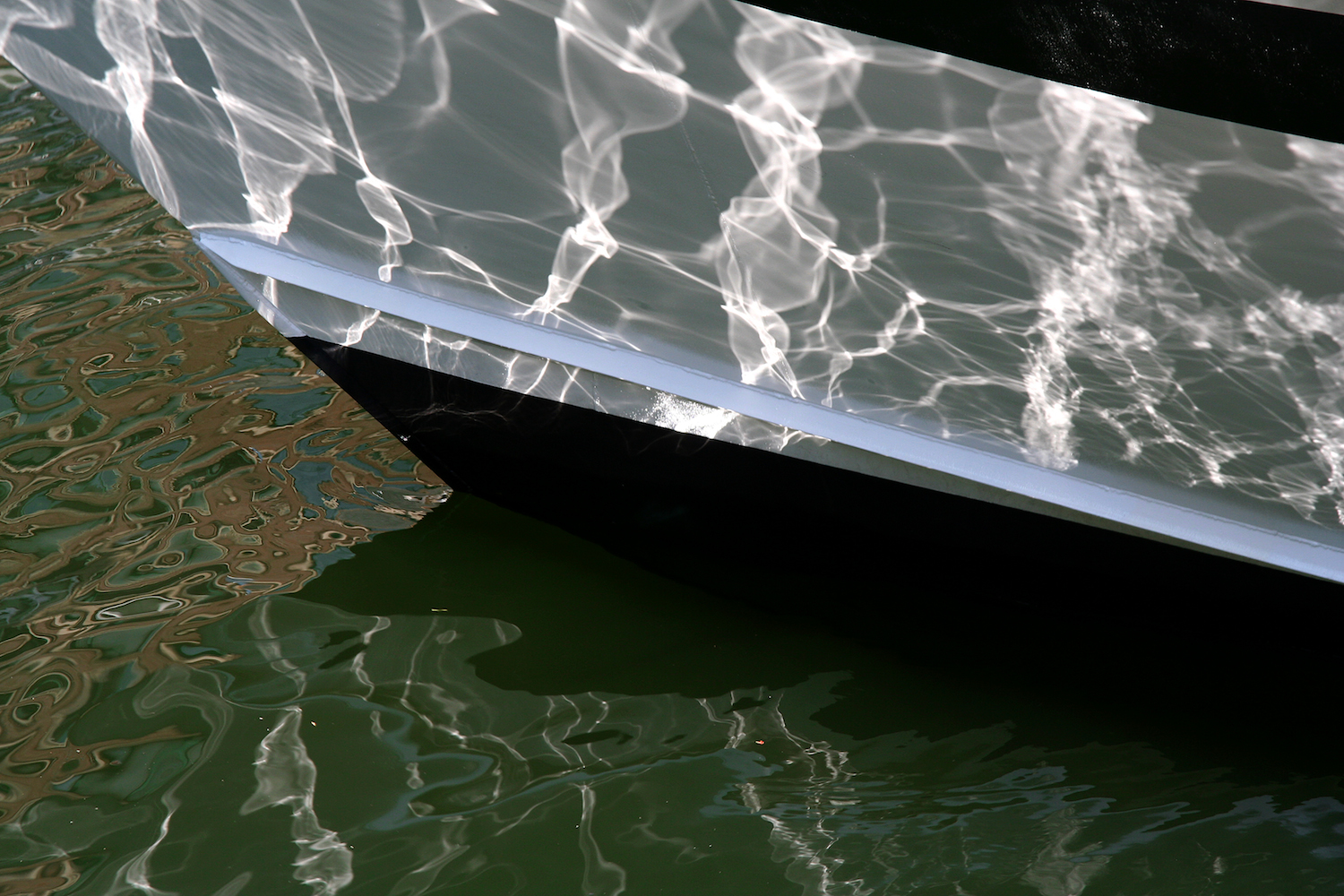

Now, it’s time to get a little hand-wavey. Our goal isn’t to literally grab every possible photon and count it, our goal is to reconstruct the incident light field well enough that it looks like we captured the whole thing. Current BBOCs don’t sample nearly often enough to do this: if a bright glint falls between cameras, it won’t be captured, and can’t be reconstructed. Can we visualize what those glints look like, to figure out how many cameras we’d need? Conveniently enough, we can:

The bright lines on the boat’s hull are places where the sun is reflecting off the water, magnified by the shape of a ripple. The pattern on the boat represents how the image of the sun changes across the surface of the boat. Now, this is an extreme case: you can literally see it projected onto a surface. But the same phenomenon is true of any high-spatial-frequency imagery: we can see the sun’s light on the boat, but if you were standing there in the same place looking toward the water, you’d see the sun, sky, clouds, probably even nearby trees and objects reflected on the water, changing with your position.
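Here’s a toy 2D model of that position dependence (my own simplification, not derived from the photo): treat the water as a flat mirror at height zero and the sun as infinitely far away in the +x direction. By the law of reflection, you see the sun’s reflection where your downward line of sight makes the same angle with the water as the sun does, so the bright spot on the water moves exactly as far as your eye does. Real ripples scramble this into the dense, fast-changing pattern on the hull, but each glint still belongs to the viewer’s position, not to the surface. The eye height and sun angle below are hypothetical.

```python
import math


def glint_position(eye_x: float, eye_height: float, sun_elevation_deg: float) -> float:
    """Where on flat water (z = 0) an eye sees the sun's mirror reflection.

    Toy 2D model: the sun is infinitely far away in the +x direction at the
    given elevation. The reflected ray leaves the water at that same elevation
    angle, so the eye must look down at it, hitting the water at this x.
    """
    elev = math.radians(sun_elevation_deg)
    return eye_x + eye_height / math.tan(elev)


if __name__ == "__main__":
    # Hypothetical viewer: eye 1.6 m above the water, sun 30 degrees up.
    before = glint_position(0.0, 1.6, 30.0)
    after = glint_position(0.5, 1.6, 30.0)  # step 0.5 m toward the sun
    print(f"glint at x = {before:.3f} m, then x = {after:.3f} m")
    print(f"the glint moved {after - before:.2f} m along with the viewer")
```

A texture painted onto a model of the water would keep that bright spot fixed in place; the real reflection travels with you.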

And if those reflections didn’t move correctly as you moved, or have the correct stereo perspective… it wouldn’t look like water. It might look like a picture of water, like a poster laid on the ground. It depends on how it’s handled, but I am confident the footage won’t look like water unless it’s sampled and reconstructed correctly.

That’s a pretty crappy constraint for a camera system. It’s okay if a camera system won’t perform well in low light, or flares easily, or doesn’t handle high contrast: professionals are used to these kinds of issues. But: you can’t film water? Or cars, or glass? C’mon, that’s barely a camera. At best it’s a VFX tool to create source material for animation. (This may also be why some BBOCs don’t bother with positional tracking, like the Ozo — if professionals need to animate the footage anyway, why bother with half-measures?)

So, how good does a BBOC have to be to film water? It’s going to be a sliding scale, I think. More cameras equals more perspectives equals more subjects you can film. Eventually, with dense enough sampling, it may appear perfect.

But… I want my holographic camera soon, and “probably won’t have crippling artifacts” is pretty wishy-washy. So let’s try to put a number on this thing, and figure out how far off we are. (More after the break.)

Boxes, little boxes, ticky tacky boxes

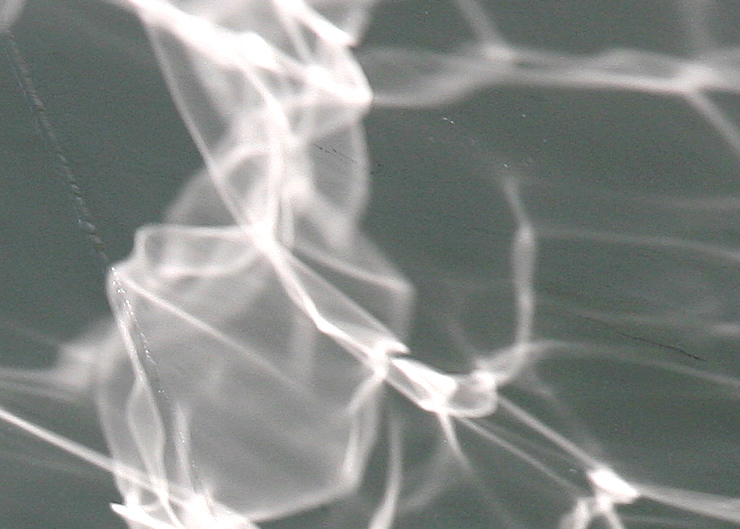

So, what’s a minimum viable holographic camera? Perhaps we can build a hand-waving intuition: grab an envelope, flip it over, and let’s start calculating. First, take a closer look at that water-reflecting image from earlier. I’ve grabbed a section of the hull that’s about the same size as today’s BBOCs: ~50 cm. The sunlight dapple-pattern will show us how much spatial resolution we’ll need:

The first image is as-shot, which is 720 samples across. Each pixel represents a location where we would have to place a camera. Yes, that’s a silly number of cameras, but the result is high enough resolution that we can consider it ‘ground truth’: our eyes can’t perceive spatial differences smaller than our pupil width (≥ ~2 mm), because each eye averages the light arriving across its whole pupil. Since the first dapple-pattern image has about half a millimeter resolution, it samples more finely than the Nyquist limit for what we could perceive (half of ~2 mm, or ~1 mm). So far, so good. That’s almost 400,000 cameras, though. Somewhat impractical.

For each resampled image, I downsampled by a linear factor of four from the previous image (for a 16x reduction in total pixels, or ‘cameras’). I also just grabbed every fourth pixel; this simulates the aperture function, which throws away most of the light. In the first resampled image, our camera array is now a merely-stupid 25,000 cameras. And the structure looks… basically identical. So far, so good: we’re not losing much by tossing out those extra 375,000 cameras, or about 94% of the light.

Next I threw away 94% of the remaining cameras, so we’ve got about 1,500 in our array now, one every 8 mm or so (about the largest pupil diameter). And visually, it looks like most of the glints would be captured by at least one camera in this array, so we could reconstruct the proper, sparkly reflectance. And with only about a hundred times as many cameras as our current BBOCs!

After another 4x linear reduction, we’re pushing about 100 cameras now, and though the image is significantly fuzzier, the overall structure is still pretty visible. Will it look good enough in a headset? I have no idea; we’re literally looking at a boat, people. But it’s possible a 100 camera array would fall into the ‘good enough for us to be fooled’ category.

Finally, at an additional 4x reduction, there’s not much detail left. This, by the way, is the current state of the BBOC: this represents about four cameras to capture the spatial structure of the scene. (In a ~16 camera array, you don’t have more than about 4 cameras looking at any one object point.) You would not see real glitter or sparkle in this scenario.
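The bookkeeping for that whole sequence fits in a few lines. This sketch starts from the text’s own figures (almost 400,000 cameras, about half a millimeter apart) and applies the same 4x linear decimation per step, reporting camera count, spacing, and how many orders of magnitude each step sits above a 16-lens BBOC. It’s just arithmetic; it says nothing about how the resampled images actually look.

```python
import math

# Starting figures taken from the text; everything else is plain arithmetic.
START_CAMERAS = 400_000   # "almost 400,000" samples in the as-shot image
START_PITCH_MM = 0.5      # "about half a millimeter resolution"
LINEAR_FACTOR = 4         # keep every 4th sample along each axis per step
CURRENT_BBOC_LENSES = 16  # a ~16-lens ball, for comparison


def decimation_table(n_steps: int = 4):
    """Return (step, camera_count, camera_spacing_mm) for each decimation step."""
    rows = []
    for step in range(n_steps + 1):
        cameras = START_CAMERAS / LINEAR_FACTOR ** (2 * step)  # 16x fewer per step
        pitch_mm = START_PITCH_MM * LINEAR_FACTOR ** step       # 4x wider spacing
        rows.append((step, cameras, pitch_mm))
    return rows


def orders_of_magnitude_over_bboc(cameras: float) -> float:
    """How many powers of ten more cameras than a 16-lens array."""
    return math.log10(cameras / CURRENT_BBOC_LENSES)


if __name__ == "__main__":
    for step, cameras, pitch in decimation_table():
        print(f"step {step}: ~{cameras:,.0f} cameras, one every {pitch:g} mm, "
              f"{orders_of_magnitude_over_bboc(cameras):+.1f} orders vs. 16 lenses")
```

The ~1,500-camera step sits about two orders of magnitude above a 16-lens array, and the ~100-camera step a bit under one, which is where the ‘one or two orders of magnitude’ estimate comes from.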

So, quick caveats: first, we need to see results in an actual headset (or at least a calibrated boat?). Second, this is only one example; water, glass, smoke, reflections off of cars, calmer and rougher water will behave differently. So don’t take this test too seriously, but what I hope we’re seeing with this exercise is we’re only one or two orders of magnitude away from a more ‘holographic,’ light field-like approach. Yes, one or two orders of magnitude is a lot, but it’s not ‘infinite’; is there a Moore’s law for BBOCs?

Focusing to infinity… and beyond

We’ve focused on virtual reality in this article, but light fields are useful for a lot more. Holographic capture lets you create multi-perspective content for AR and future holographic displays, and that future isn’t far off. Our helpful holographer Linda Law can rattle off a bunch of companies working on the problem: Zebra, Holografika, Leia, Ostendo, and several others in stealth mode. (And no, that Tupac thing is not actually a hologram.) All these systems are currently limited to computer-generated imagery, until we get our holographic camera.

And of course there’s AR: HoloLens, Magic Leap, others. And yeah, if Magic Leap is going to play anything on their mythical glasses besides computer-generated robots and whales, we’re going to need holographic cameras. (They’ve also been hiring holographers, for the record, so maybe they’re making cameras, too. I wouldn’t put it past them; I wouldn’t put anything past them.)

Your punchline, sir

So what have we learned? Well, all these displays need content. And we need light field capture — holographic cameras — to deliver live action content to these displays properly. This is a very big deal, because the success or failure of these future platforms hinges on content: until they have good, live action content, these platforms are akin to an iPhone without an app store, or a TV with nothing but cartoons (okay, that’s not even an analogy, that’s just what they are).

But if you can bring the real world into the virtual world, then VR/AR/holographic displays are a ton more relevant. They’re not just toys anymore, or specialist tools; they can grow to be major platforms, the future of mass media… and suddenly all those overblown predictions about VR/AR eating the world start to sound more reasonable.

So those are the stakes. That’s why we need holographic, or ‘light field’ cameras. And perhaps that’s why people are so eager to brand their cameras ‘light field,’ even when they’re clearly not — because deep down everyone really, really wants holographic cameras.

Because we all want to live in the future, right?