In today’s annual keynote, Apple announced its latest upgrade to the iPhone lineup: iPhone 7 and iPhone 7 Plus. Despite the hype and anticipation (fueled by patent filings and hirings) around the possibility of Apple announcing a virtual reality head-mounted display (HMD) to pair with the phone, Apple did not announce a HoloLens or Gear VR competitor. Instead, micro-innovations in Apple’s optics, computer vision, machine learning, and wireless audio make iPhone 7 a device with all the right hardware on which to build an augmented reality (AR) platform. The groundwork is in place for a powerful AR platform that could arrive with next-generation hardware.

A Powerhouse in a Phone

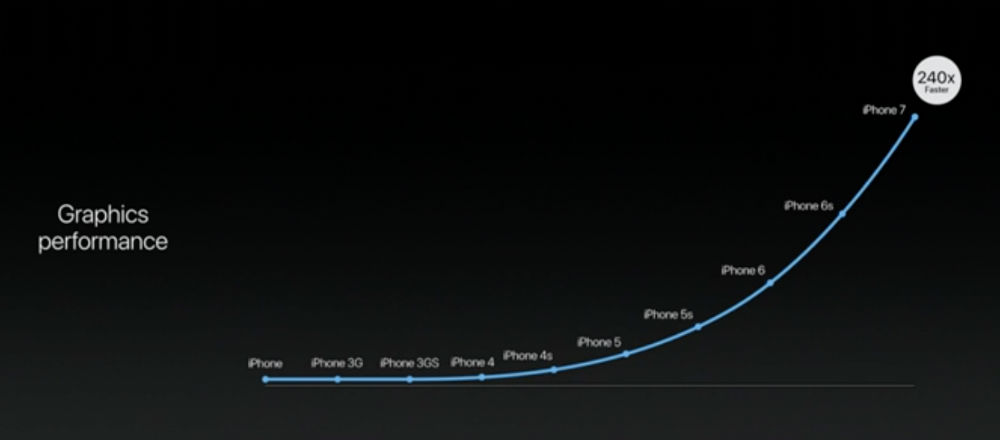

The new 64-bit A10 Fusion chip pairs a quad-core CPU that is 40 percent faster than last year’s A9 with a six-core GPU that is 50 percent faster, all while consuming 33 percent less power. These specs put it at the cutting edge of mobile processing and battery optimization, which is crucial for powering camera-intensive AR apps such as Pokemon Go. A powerful GPU also matters for running computer vision machine learning models locally on the device, with cloud computing handling more intensive workloads.

Giving Sight to the Machine

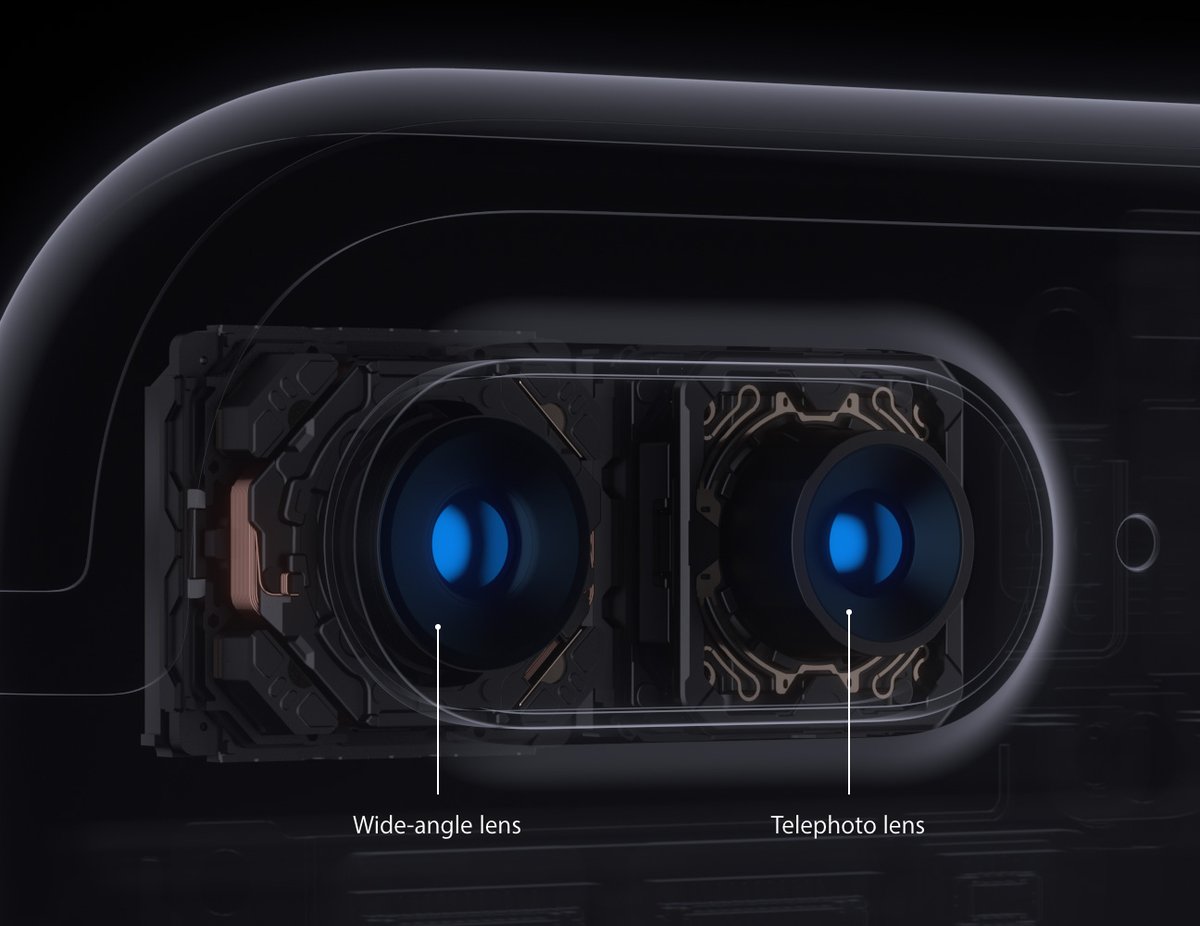

Though Apple has made aggressive acquisitions of camera technology over the past few years (LinX, Faceshift), their results aren’t necessarily visible at first glance. Still, iPhone 7 comes with a 12 MP camera with a wide ƒ/1.8 aperture (which lets in more light), optical image stabilization (for better low-light pictures), and an image sensor that is 60 percent faster and 30 percent more energy-efficient (Pokemon Go players rejoice!). iPhone 7 Plus packs an even bigger punch with a dual lens camera system, much like the LG G5, Huawei P9, and Honor 8. It pairs a wide-angle lens with a telephoto lens for 2x optical zoom and, combined with software, up to 10x digital zoom.

The real showstopper of the new camera system is the software behind it all. Both iPhone 7 models feature a new image signal processor that uses machine learning and computer vision techniques to analyze the subject(s) of your picture and make the automatic adjustments needed to get the best shot. It can even recognize faces and people in the photo.


With the iPhone 7 Plus dual lens camera, Apple is implementing a depth mapping feature that scans the subject and environment to separate the objects in the foreground, then applies clever effects such as blurring the background behind the subject. This feature won’t be available at launch on September 16th, but will arrive as a free update to iOS 10 later this year.
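Apple hasn’t said how its depth effect works, but the basic idea of "blur whatever is behind the subject" can be illustrated with a minimal Python sketch. It assumes a per-pixel depth map is already available (on the iPhone 7 Plus it would have to be estimated from the two lenses) and uses a single hypothetical depth threshold; the `portrait_blur` and `box_blur` helpers and the grayscale image stored as nested lists are illustrative assumptions, not Apple’s API.

```python
from typing import List

Image = List[List[float]]  # hypothetical grayscale image / depth map as rows of values


def box_blur(img: Image, radius: int = 1) -> Image:
    """Average each pixel with its neighbours in a (2r+1)x(2r+1) window, clipped at the edges."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total, count = 0.0, 0
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    ny, nx = y + dy, x + dx
                    if 0 <= ny < h and 0 <= nx < w:
                        total += img[ny][nx]
                        count += 1
            out[y][x] = total / count
    return out


def portrait_blur(img: Image, depth: Image, max_subject_depth: float, radius: int = 1) -> Image:
    """Keep pixels at or nearer than max_subject_depth sharp; replace farther ones with blurred values."""
    blurred = box_blur(img, radius)
    return [
        [img[y][x] if depth[y][x] <= max_subject_depth else blurred[y][x] for x in range(len(img[0]))]
        for y in range(len(img))
    ]


if __name__ == "__main__":
    image = [[0, 0, 9], [0, 0, 9], [0, 0, 9]]   # bright column on the right
    depth = [[1, 1, 5], [1, 1, 5], [1, 1, 5]]   # ...which is also the far background
    print(portrait_blur(image, depth, max_subject_depth=2.0))
    # prints [[0, 0, 4.5], [0, 0, 4.5], [0, 0, 4.5]]
```

A real portrait effect would likely use a graded blur that grows with distance from the focal plane and a much better depth estimate; the hard threshold here just makes the foreground/background split easy to see.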


Cutting the Cord

After months of debate over the impending removal of the 3.5mm headphone jack, Apple dropped it and is shifting the standard for audio devices to Lightning and wireless headphones. This offers a few advantages and opportunities: higher-quality music playback, easier interaction with AIs like Siri, and even the potential for head tracking to become a standard feature in headphones.

Headphones connected through Lightning draw on a powered digital port, so they can carry their own digital-to-analog converter and amplifier and deliver music with a forceful kick that was previously only possible with a separate amplifier and DAC.

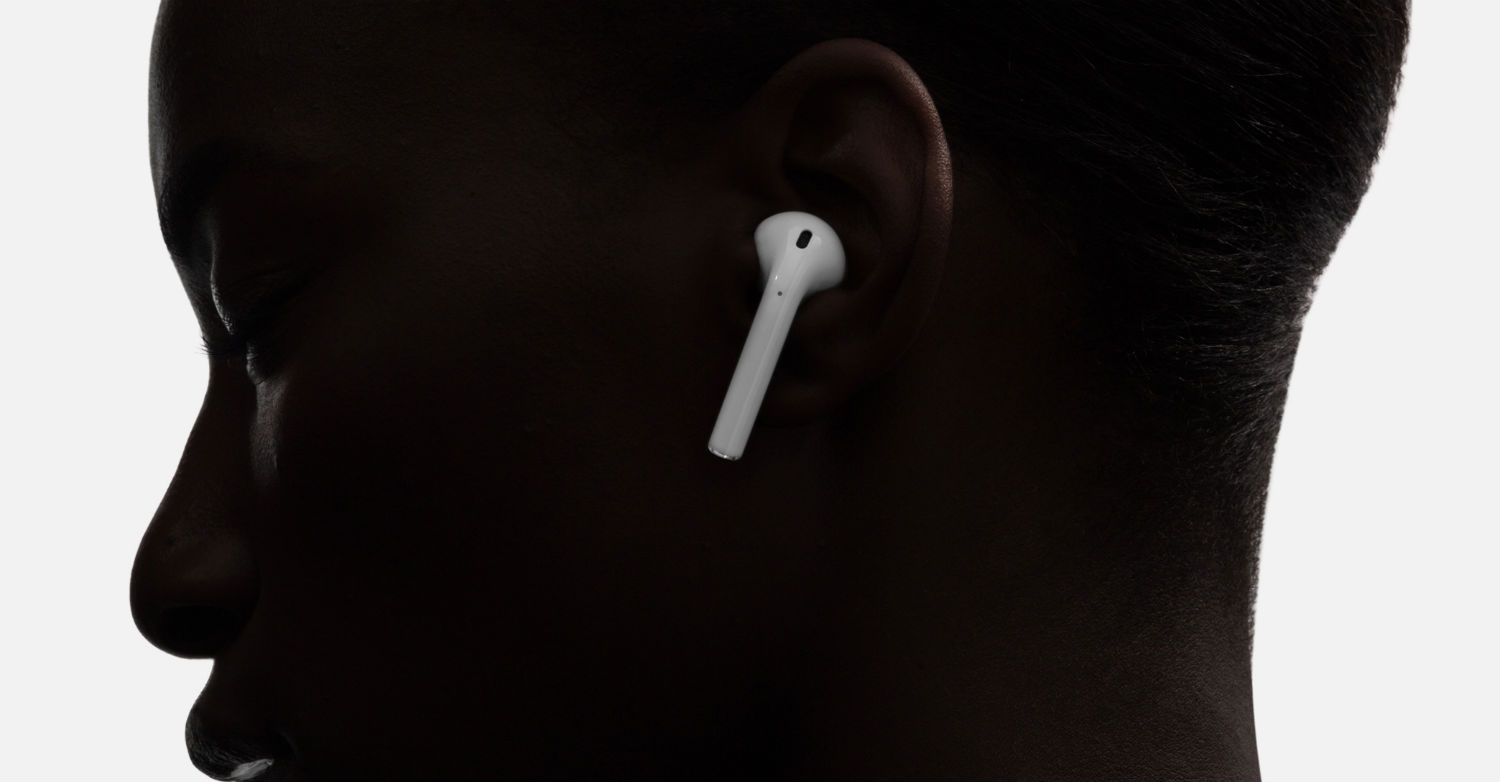

On the wireless side, Apple announced its new AirPods earphones, with a custom W1 wireless chip that handles onboard processing for audio input and output. The company is also reportedly developing its own “Bluetooth-like” data transfer protocol, since current Bluetooth headphones fall short on both ease of use and music quality.

The most surprising addition to the AirPods is their built-in accelerometers. Apple advertises them as a way to access Siri by double-tapping the side of an earbud, but we could see them eventually extended to head tracking for spatialized music and audio, a core feature of augmented reality technology.
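Why head tracking matters for spatialized audio can be shown with a minimal Python sketch: if the earbuds could report which way your head is turned, a virtual sound source can stay fixed in the room by subtracting head yaw from the source’s direction before panning. The head-yaw input is a hypothetical assumption (an accelerometer alone likely could not measure yaw reliably), the `relative_azimuth` and `pan_gains` names are illustrative, and simple constant-power stereo panning stands in for the head-related transfer functions a real spatial audio system would use.

```python
import math
from typing import Tuple


def relative_azimuth(source_deg: float, head_yaw_deg: float) -> float:
    """Direction of a world-fixed source relative to where the head faces, wrapped to [-180, 180).

    Angles are in degrees, measured clockwise from straight ahead (positive = to the right).
    head_yaw_deg is a hypothetical sensor reading, not a real AirPods API.
    """
    return (source_deg - head_yaw_deg + 180.0) % 360.0 - 180.0


def pan_gains(source_deg: float, head_yaw_deg: float) -> Tuple[float, float]:
    """Constant-power (left, right) gains for a world-fixed source at the given head yaw."""
    rel = math.radians(relative_azimuth(source_deg, head_yaw_deg))
    pan = math.sin(rel)                       # -1 = hard left, 0 = centre, +1 = hard right
    theta = (pan + 1.0) * math.pi / 4.0       # map [-1, 1] onto [0, pi/2]
    return math.cos(theta), math.sin(theta)   # left**2 + right**2 == 1


if __name__ == "__main__":
    # A source 90 degrees to the right is hard right while facing forward...
    print(pan_gains(90.0, 0.0))
    # ...and centred once the listener turns to face it.
    print(pan_gains(90.0, 90.0))
```

Because it only uses the sine of the angle, this sketch cannot tell a sound in front from one behind; resolving that is exactly what the fuller HRTF-based approach would add.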

What does this all mean?

Apple is still being conservative in its plunge into the world of immersive technology, advertising these features mainly as photography tools, but deeper access to the camera API could open up tremendous opportunities for aspiring AR developers to get creative.

Wondering what Snapchat will do with the depth mapping in an iPhone 7+

— Benedict Evans (@BenedictEvans) September 7, 2016

These small steps can already have an impact on the apps and platforms we use every day. The two biggest augmented reality platforms on the iOS App Store are Snapchat’s “Lenses,” with their facial filters, and Pokemon Go. With access to a depth map of the environment, Pokemon could in theory come alive by popping up around corners and hiding behind shrubs, and Snapchat could place Lens elements around your room or on your friends’ faces.

Spent past 4 yrs pitching “the day iPhone has a dual camera” & what it means for the 3D world. Excited it’s today. pic.twitter.com/7cjVeFrhK9

— alban (@albn) September 7, 2016

These are the simple, low-hanging use cases. Another would be scanning your environment to create a 3D photogrammetry model of your room that you could export to services like IKEA to previsualize your next remodel. If any of these come to fruition on iPhone, Apple will be in the same field as Google Tango and Intel RealSense, but potentially in a much more efficient and battery-conscious way.

Today’s announcements lay the groundwork for a world where every Apple device will share the same “reality”. The depth mapping, computer vision, and machine learning abilities of iPhone 7 Plus are incredibly significant for Apple’s jump to the next computing platform. Don’t be surprised if Apple announces something even more significant next year, for the iPhone’s 10th anniversary.